For this project, we will compare the WaWiCo sensor with a conventional Hall-effect mechanical flow meter. The WaWiCo sensor introduces a novel method for water metering: non-invasive acoustic analysis. The benefit of the WaWiCo method becomes evident during the mechanical flow meter analysis, where we need to match pipe diameters and fittings and ensure that the flow terminates at a point. Otherwise, mechanical meters require cutting into the piping, which is not an option for many users. Using a Raspberry Pi computer and a WaWiCo USB water meter kit, the frequency content of water flow for a given pipe is analyzed. Additionally, this frequency response will be correlated with the flow rate (in L/s) approximated by the mechanical flow meter. This brings us one step closer to being able to non-invasively measure water flow using the WaWiCo method.

This is the third tutorial in a series dedicated to exploring the Raspberry Pi Foundation's groundbreaking new microcontroller: the Raspberry Pi Pico. The first entry centered on the basic principles of interfacing with the Pico and programming with Thonny and MicroPython, while the second entry focused on emulating the Google Home and Amazon Alexa LED animations with a WS2812 RGB LED array. In this tutorial, an SSD1306 organic light emitting diode (OLED) display will be controlled using the Pico microcontroller. A MicroPython library will be used as the base class for interfacing with the SSD1306, while custom algorithms are introduced to create data displays. Additionally, a custom Python3 algorithm will be given that allows users to show a custom image on the display. Lastly, a real-time plot will be created that shows an audio signal output by a MEMS microphone, emulating a real-time graph display. The SSD1306 is a useful tool for smaller scale projects that require real-time data displays, control feedback, and IoT testing. The power of the Pico microcontroller makes interfacing with the SSD1306 fast and easy, as will become evident when working with the two together.

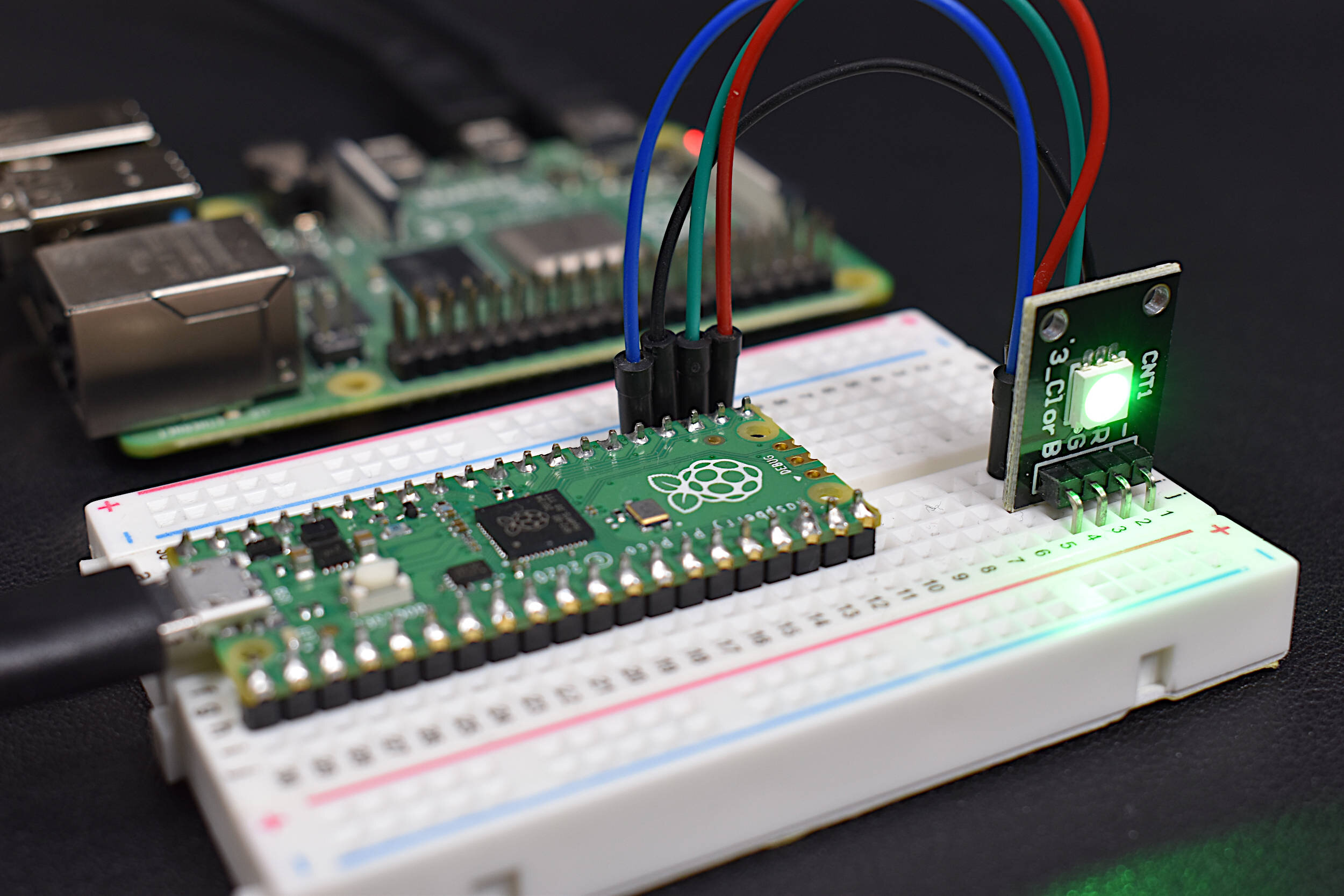

This is the second entry in the Raspberry Pi Pico tutorial series dedicated to exploring the capabilities of the Raspberry Pi Foundation's groundbreaking new Pico microcontroller. A WS2812 RGB LED is controlled via the programmable I/O system (PIO) on the Pico microcontroller. The code and methods used to control the WS2812 are based on the Raspberry Pi Pico MicroPython SDK project entitled "Using PIO to drive a set of NeoPixel Ring (WS2812 LEDs)." A state machine is used on the Pico to control the WS2812 LED array, which allows users to test a range of algorithms that affect the ring light. The light mappings will subsequently be capable of emulating LED effects similar to those demonstrated by the Amazon Alexa or Google Home devices. A universal wiring diagram is given that allows any number of LEDs to be wired to the Pico; we tested it with up to 60 LEDs.
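As a rough illustration of the kind of color data a WS2812 array expects, the sketch below packs a red/green/blue color into a single 24-bit word in green-red-blue order, which is the byte order WS2812 LEDs use. The helper names `pack_grb` and `ring_frame` and the `brightness` parameter are hypothetical and are not taken from the tutorial's code; on the Pico, the resulting words would be handed to the PIO state machine.

```python
def pack_grb(red, green, blue, brightness=1.0):
    """Pack an RGB color into one 24-bit word in green-red-blue order.

    `brightness` is a hypothetical 0.0-1.0 scale factor applied to every channel.
    """
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be between 0.0 and 1.0")
    r = int(red * brightness) & 0xFF
    g = int(green * brightness) & 0xFF
    b = int(blue * brightness) & 0xFF
    # WS2812 LEDs expect green first, then red, then blue.
    return (g << 16) | (r << 8) | b


def ring_frame(num_leds, lit_index, color):
    """Build one frame for the array: a single lit LED, all others off."""
    return [pack_grb(*color) if i == lit_index else 0 for i in range(num_leds)]


if __name__ == "__main__":
    # One blue LED at position 2 on a 4-LED strip.
    print([hex(word) for word in ring_frame(4, 2, (0, 0, 255))])
```

Stepping `lit_index` around the ring frame by frame is one simple way to build a rotating animation from this helper.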

A new type of water meter produced by Water Wise Controls (WaWiCo) introduces a novel method for water metering: non-invasive acoustic analysis. Their USB water metering kit allows users to listen to their pipes without the need for plumbing work. In this tutorial, the acoustic profile of a piping system will be explored using a Raspberry Pi computer, the Python programming language, and a WaWiCo USB water meter kit. The resulting analysis will allow users to identify the acoustic profile of their piping system and determine when water is flowing. This is the first of a series of entries into non-invasive water metering from WaWiCo, where open-source technologies will be used to characterize a piping system based on the acoustic profile of a user's home or apartment.

The Raspberry Pi Pico was recently released by the Raspberry Pi Foundation as a competitive microcontroller in the open-source electronics sphere. The Pico shares many of the capabilities of common Arduino boards, including analog-to-digital conversion (12-bit ADC), UART, SPI, I2C, and PWM, among others. The board is just 21mm x 51mm in size, making it ideal for applications that require low-profile designs. One of the innovations of the Pico is the dual-core processor, which permits multiprocessing at clock rates up to 133 MHz. One particular draw of the Pico is its compatibility with MicroPython, which is chosen as the programming tool for this project. The focus on MicroPython, as opposed to C/C++, minimizes the confusion and time required to get started with the Pico. A Raspberry Pi 4 computer is ideal for interfacing with the Pico, and can be used to prepare, debug, and program it. From start to finish, this tutorial helps users run their first custom MicroPython script on the Pico in just a few minutes. An RGB LED will be used to demonstrate general purpose input/output of the Pico microcontroller.
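To make the 12-bit ADC figure concrete, here is a minimal sketch of converting a raw ADC count to a voltage. It assumes a 3.3 V reference, which is not stated above, and the function names are hypothetical. The second helper assumes the reading arrives as a 16-bit value (as MicroPython's `ADC.read_u16()` returns) and drops the lower four bits to recover a 12-bit count.

```python
ADC_BITS = 12   # resolution quoted for the Pico's ADC
V_REF = 3.3     # assumed reference voltage in volts


def adc_to_volts(raw, bits=ADC_BITS, v_ref=V_REF):
    """Convert a raw ADC count to volts for an ADC with `bits` of resolution."""
    max_count = (1 << bits) - 1
    if not 0 <= raw <= max_count:
        raise ValueError("raw count out of range for this resolution")
    return raw * v_ref / max_count


def u16_to_12bit(value_u16):
    """Reduce a 16-bit scaled reading to a 12-bit count (assumed scaling)."""
    return value_u16 >> 4


if __name__ == "__main__":
    # A half-scale count corresponds to roughly half the reference voltage.
    print(round(adc_to_volts(2048), 3))
```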

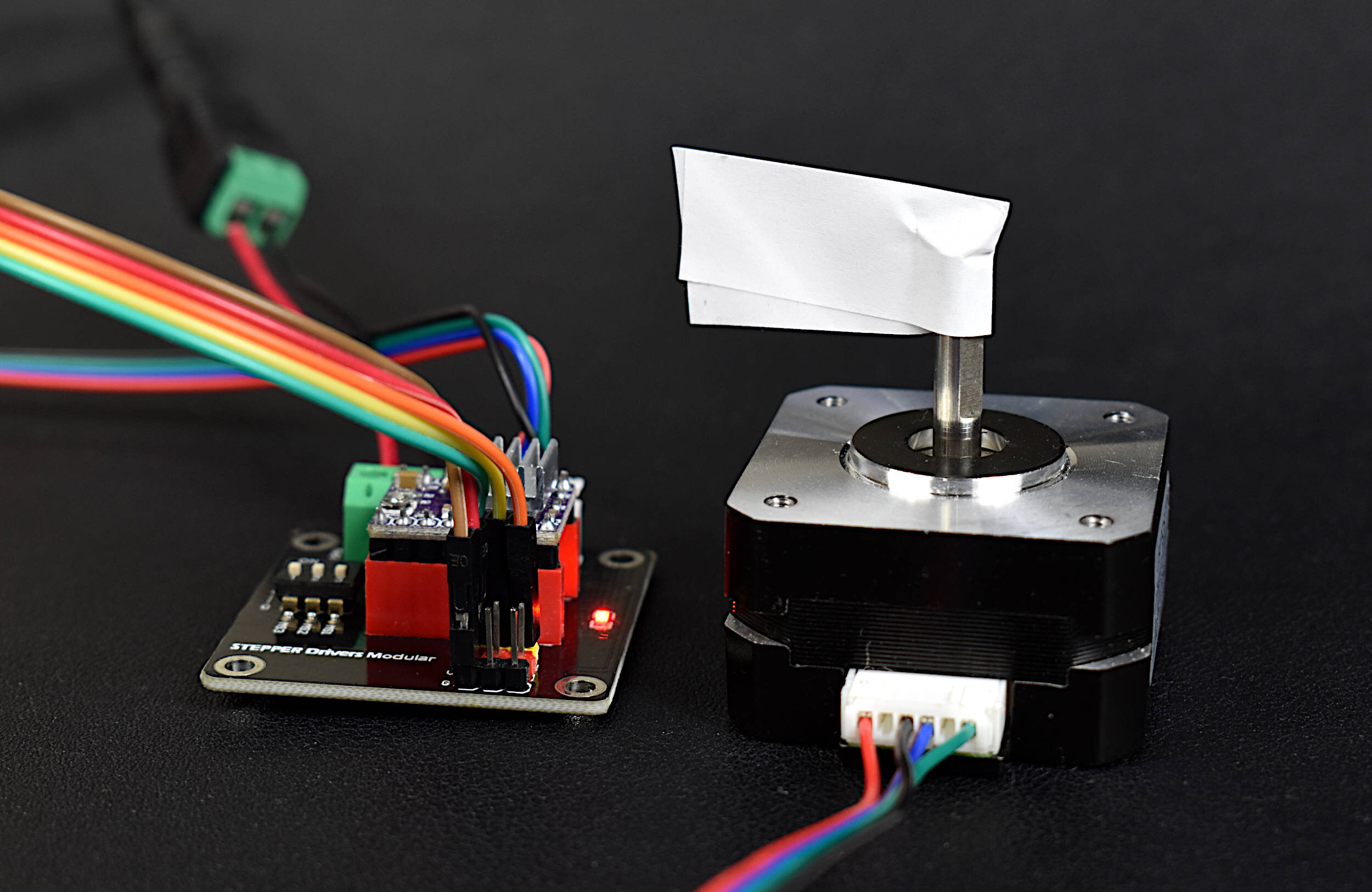

The NEMA 17 is a widely used class of stepper motor found in 3D printers, CNC machines, linear actuators, and other precision engineering applications where accuracy and stability are essential. The NEMA-17HS4023 is introduced here, which is a version of the NEMA 17 that has dimensions 42mm x 42mm x 23mm (Length x Width x Height). In this tutorial, the stepper motor is controlled by a DRV8825 driver wired to a Raspberry Pi 4 computer. The Raspberry Pi uses Python to control the motor using an open-source motor library. The wiring and interfacing between the NEMA 17 and Raspberry Pi are given, with an emphasis on the basics of stepper motors. The DRV8825 control parameters in the Python stepper library are broken down to show users how varying each parameter impacts the behavior of the NEMA 17. Simple characteristics of stepper control are explored: stepper directivity (clockwise and counterclockwise), step incrementing (full step, half step, micro-stepping, etc.), and step delay.
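The relationship between step incrementing, step delay, and motor motion can be sketched with a little arithmetic. The example below assumes a 1.8° full step (200 full steps per revolution), which is typical for NEMA 17 motors but is not stated above, and it treats the step delay as the total time spent per pulse. The function names and step-type labels are hypothetical and do not come from the stepper library used in the tutorial.

```python
FULL_STEPS_PER_REV = 200  # assumes a 1.8 degree full step

# Microstep resolutions offered by the DRV8825 driver.
MICROSTEP_DIVISORS = {
    "Full": 1, "Half": 2, "1/4": 4, "1/8": 8, "1/16": 16, "1/32": 32,
}


def pulses_for_angle(angle_deg, step_type="Full"):
    """Number of step pulses needed to rotate by `angle_deg`."""
    divisor = MICROSTEP_DIVISORS[step_type]
    return round(abs(angle_deg) / 360.0 * FULL_STEPS_PER_REV * divisor)


def move_time_s(pulses, step_delay_s):
    """Approximate move duration, assuming `step_delay_s` elapses per pulse."""
    return pulses * step_delay_s


if __name__ == "__main__":
    pulses = pulses_for_angle(90, "1/16")
    print(pulses, "pulses,", move_time_s(pulses, 0.001), "seconds")
```

Finer microstepping multiplies the pulse count for the same rotation, so at a fixed step delay the move takes proportionally longer.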

The TF-Luna is an 850nm Light Detection And Ranging (LiDAR) module developed by Benewake that uses the time-of-flight (ToF) principle to detect objects within the field of view of the sensor. The TF-Luna is capable of measuring objects 20cm - 8m away, depending on the ambient light conditions and surface reflectivity of the object(s) being measured. A vertical cavity surface emitting laser (VCSEL) is at the center of the TF-Luna, which is categorized as a Class 1 laser, making it very safe for nearly all applications [read about laser classification here]. The TF-Luna has a selectable sample rate from 1Hz - 250Hz, making it ideal for more rapid distance detection scenarios. In this tutorial, the TF-Luna is wired to a Raspberry Pi 4 computer via the mini UART serial port and powered using the 5V pin. Python will be used to configure and test the LiDAR module, with specific examples and use cases.
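The time-of-flight principle reduces to one line of arithmetic: the light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below illustrates only that relationship, using the 20cm to 8m range quoted above. It is not how the TF-Luna is read in practice, since the module reports distance directly over serial, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

MIN_RANGE_M = 0.2  # 20cm lower limit quoted for the TF-Luna
MAX_RANGE_M = 8.0  # 8m upper limit quoted for the TF-Luna


def tof_to_distance_m(round_trip_s):
    """Distance to the target from the round-trip travel time of the light."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def in_range(distance_m, lo=MIN_RANGE_M, hi=MAX_RANGE_M):
    """True when a distance falls inside the sensor's stated range."""
    return lo <= distance_m <= hi


if __name__ == "__main__":
    # A target 8 m away returns light after roughly 53 nanoseconds.
    print(2 * MAX_RANGE_M / SPEED_OF_LIGHT_M_S)
```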

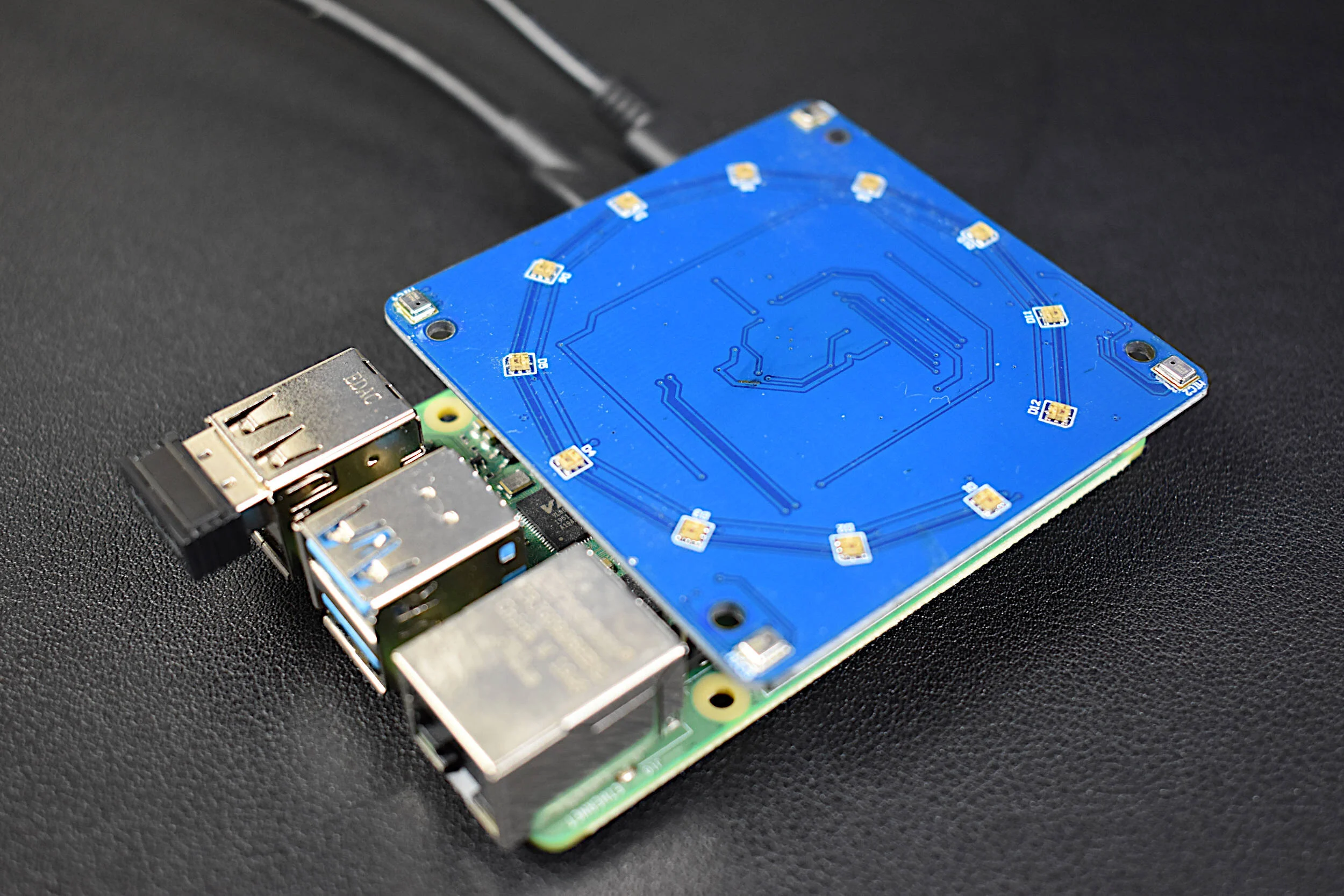

The QuadMic Array is a 4-microphone array based around the AC108 quad-channel analog-to-digital converter (ADC) with Inter-IC Sound (I2S) audio output capable of interfacing with the Raspberry Pi. The QuadMic can be used for applications in voice detection and recognition, acoustic localization, noise control, and other applications in audio and acoustic analysis. The QuadMic will be connected to the header of a Raspberry Pi 4 and used to record simultaneous audio data from all four microphones. Some signal processing routines will be developed as part of an acoustic analysis with the four microphones. Algorithms will be introduced that approximate acoustic source directivity, which can help with understanding and characterizing noise sources, room and spatial geometries, and other aspects of acoustic systems. Python is also used for the analysis. Additionally, visualizations will aid in the understanding of the measurements and subsequent analyses conducted in this tutorial.
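One common way to approximate the direction of an acoustic source with a pair of microphones is to convert the difference in arrival time into an angle. The sketch below uses the far-field relation sin(θ) = c·Δt / d for a single microphone pair. This is an illustrative technique and may differ from the algorithms used in the tutorial; the speed of sound (343 m/s), the example spacing, and the function name are all assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed, air at roughly room temperature


def arrival_angle_deg(delay_s, mic_spacing_m, speed=SPEED_OF_SOUND_M_S):
    """Angle of arrival relative to broadside for one microphone pair.

    `delay_s` is the arrival-time difference between the two microphones.
    """
    ratio = speed * delay_s / mic_spacing_m
    # Measurement noise can push the ratio slightly past +/-1; clamp it.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    spacing = 0.05  # hypothetical 5 cm microphone spacing
    print(arrival_angle_deg(spacing / (2 * SPEED_OF_SOUND_M_S), spacing))
```

With four microphones, the same calculation can be repeated across pairs to narrow down the source direction.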

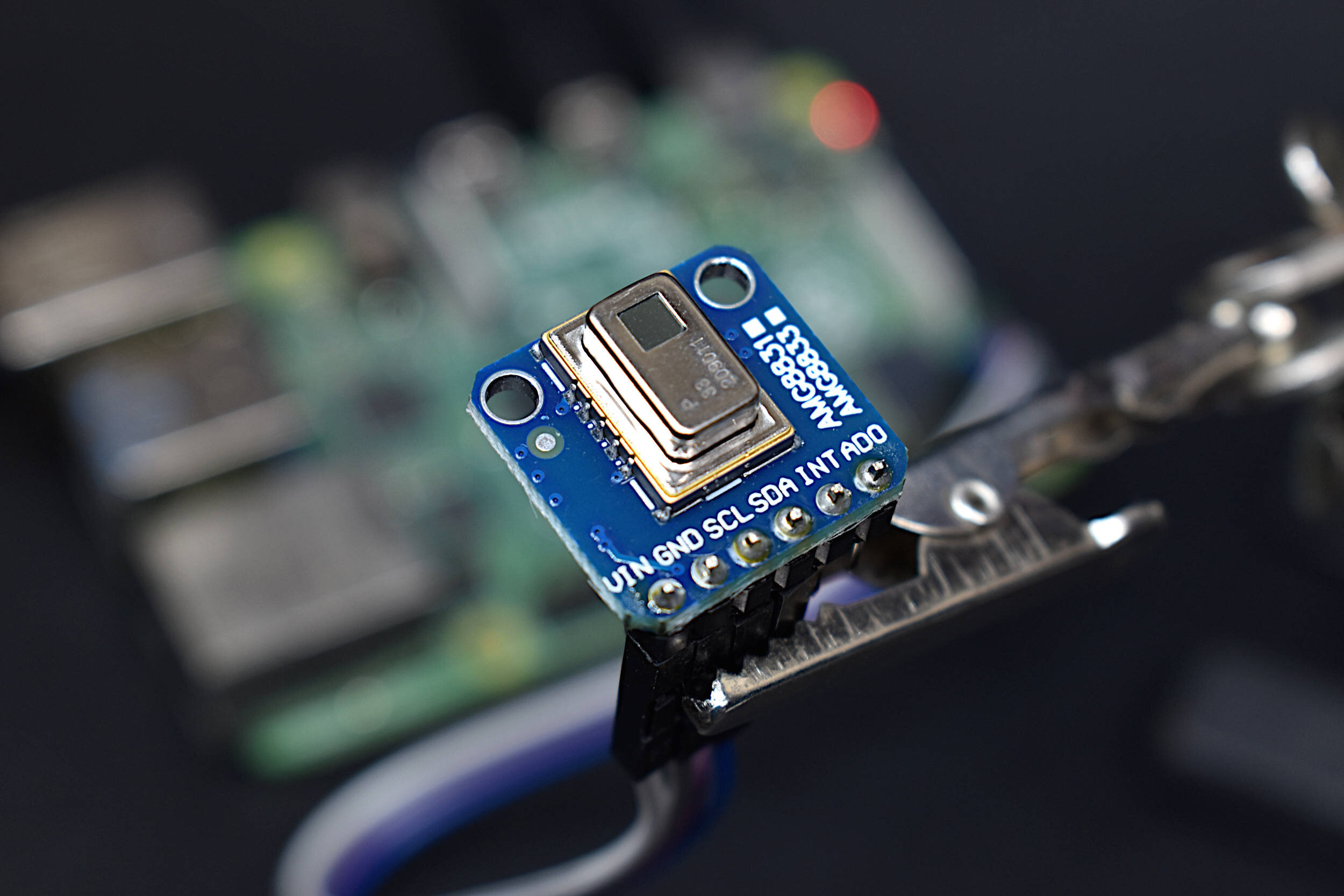

The AMG8833 infrared thermopile array is a 64-pixel (8x8) detector that approximates temperature from radiative bodies. The module is wired to a Raspberry Pi 4 computer and communicates over the I2C bus at 400kHz to send temperature from all 64 pixels at a selectable rate of 1-10 samples per second. The temperature approximation is output at a resolution of 0.25°C over a range of 0°C to 80°C. A real-time infrared camera (IR camera) is introduced as a way of monitoring temperature for applications in person counting, heat transfer of electronics, indoor comfort monitoring, industrial non-contact temperature measurement, and other applications where multi-point temperature monitoring may be useful. The approximate error of the sensor over its operable range is 2.5°C, making it particularly useful for applications with larger temperature fluctuations. This tutorial is meant as the first in a series of heat transfer analyses in electronics thermal management using the AMG8833.
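The 0.25°C resolution can be illustrated by converting raw pixel counts into temperatures and arranging them as an 8x8 grid. The sketch below assumes each pixel arrives as a 12-bit two's-complement count with 0.25°C per count; that encoding is an assumption that should be checked against the sensor datasheet, and the function names are hypothetical.

```python
PIXEL_RESOLUTION_C = 0.25  # degrees Celsius per count, as quoted above
GRID_SIZE = 8              # the AMG8833 is an 8x8 array


def pixel_raw_to_celsius(raw):
    """Convert one raw pixel count to degrees Celsius.

    Assumes a 12-bit two's-complement count (to be verified in the datasheet).
    """
    raw &= 0x0FFF
    if raw & 0x800:          # sign bit set: negative temperature
        raw -= 0x1000
    return raw * PIXEL_RESOLUTION_C


def to_grid(raw_pixels):
    """Arrange 64 raw counts into an 8x8 grid of temperatures."""
    if len(raw_pixels) != GRID_SIZE * GRID_SIZE:
        raise ValueError("expected 64 pixel values")
    temps = [pixel_raw_to_celsius(r) for r in raw_pixels]
    return [temps[i:i + GRID_SIZE] for i in range(0, len(temps), GRID_SIZE)]


if __name__ == "__main__":
    # 64 identical counts of 100 give a uniform 25.0 degree frame.
    print(to_grid([100] * 64)[0])
```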

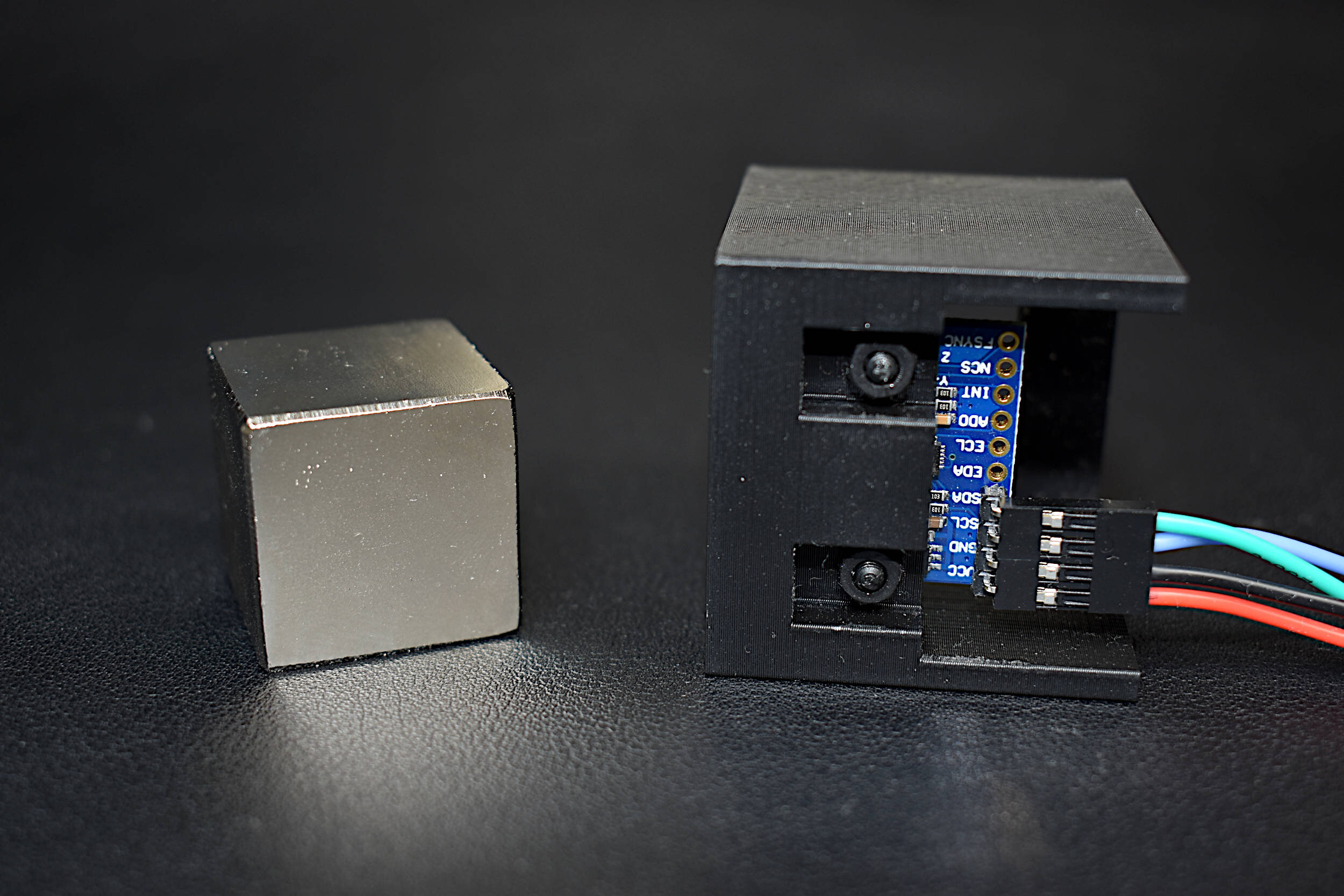

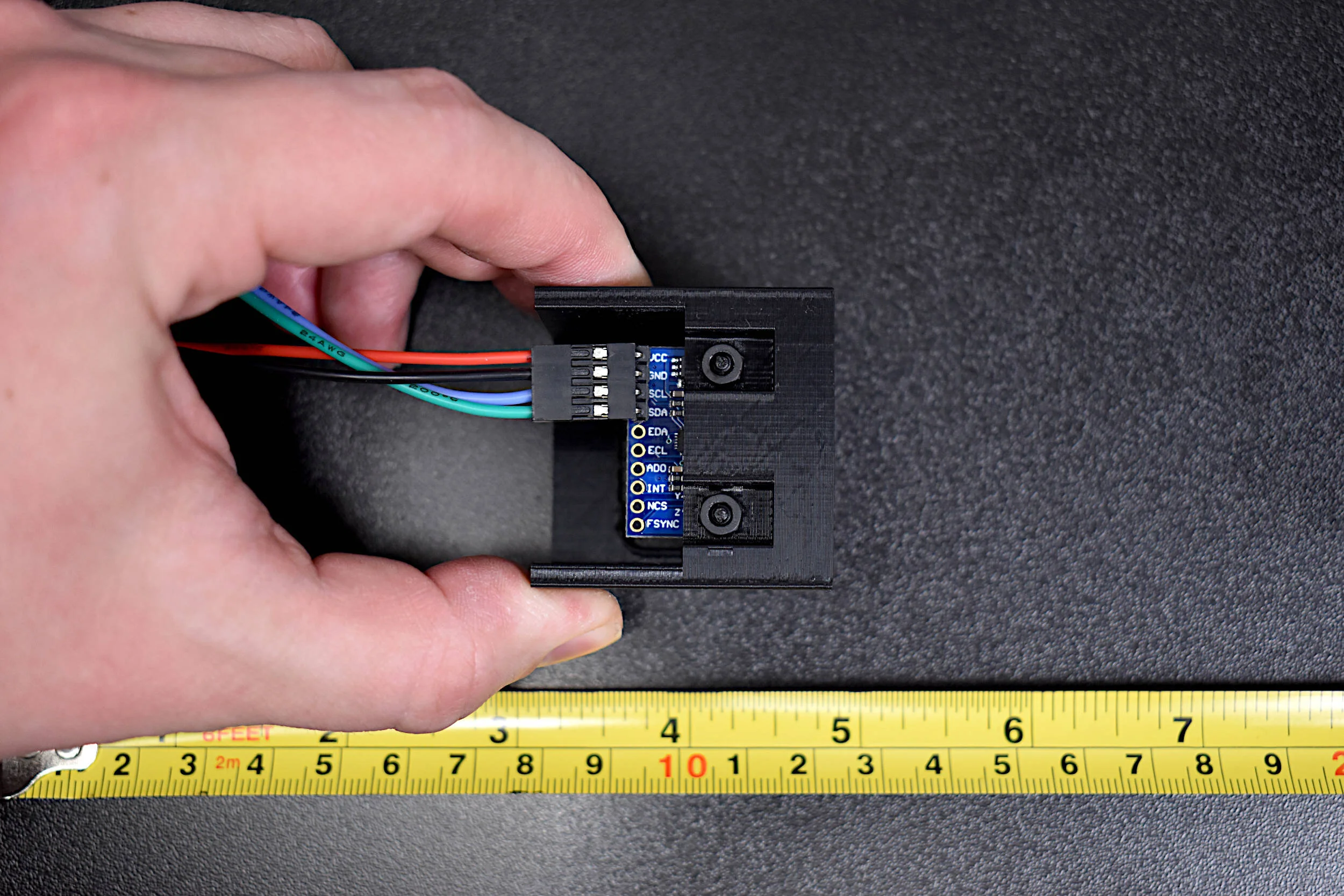

In this tutorial, methods for calibrating the magnetometer aboard the MPU9250 are explored using our Calibration Block. The magnetometer is calibrated by rotating the IMU 360° around each axis and calculating offsets for hard iron effects. Python is again used as the coding language on the Raspberry Pi computer in order to communicate with and record data from the IMU via the I2C bus. The second half of this tutorial gives a full calibration routine for the IMU's accelerometer, gyroscope, and magnetometer. The final implementation will allow for moderate (first-order) calibration of the MPU9250 under reasonable conditions, requiring only the calibration block and IMU. Finally, the complete code will save the coefficients for each sensor for future use in direct applications without the need for constant calibration. The use of the calibration coefficients will allow for improved estimates of orientation, displacement, vibration, and other relevant control and measurement analyses.
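A common first-order way to estimate hard iron offsets from rotation data is to take the midpoint between the maximum and minimum reading on each axis. The sketch below shows that approach on made-up samples. It is an illustration under that assumption and may differ in detail from the routine in the tutorial; the function names are hypothetical.

```python
def hard_iron_offsets(samples):
    """Per-axis offsets from (x, y, z) magnetometer samples.

    Each offset is the midpoint between the largest and smallest reading
    on that axis, collected while rotating the sensor through 360 degrees.
    """
    offsets = []
    for axis_values in zip(*samples):
        offsets.append((max(axis_values) + min(axis_values)) / 2.0)
    return tuple(offsets)


def apply_offsets(sample, offsets):
    """Subtract the offsets from one (x, y, z) sample."""
    return tuple(value - offset for value, offset in zip(sample, offsets))


if __name__ == "__main__":
    # Made-up readings for illustration only.
    readings = [(10.0, -5.0, 0.0), (30.0, 15.0, 4.0), (20.0, 5.0, 2.0)]
    offsets = hard_iron_offsets(readings)
    print(offsets, apply_offsets(readings[1], offsets))
```

After subtraction, readings from a full rotation should be centered on zero for each axis.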

This is the second entry in the series entitled "Calibration of an Inertial Measurement Unit (IMU) with Raspberry Pi," where the gyroscope and accelerometer are calibrated using our Calibration Block. Python is used as the coding language on the Raspberry Pi to find the calibration coefficients for the two sensors. Validation methods are also used to integrate the IMU variables to test the calibration of each sensor. The gyroscope shows a fairly accurate response when calibrated and integrated, and was found to be within a degree of the actual rotation test. The accelerometer was slightly less accurate, likely due to the double integration required to approximate displacement and the unbalanced table upon which the IMU was calibrated. Filtering methods are also introduced to smooth the accelerometer data for integration. The final sensor, the magnetometer (AK8963), will be calibrated in the next iteration of this series.
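The validation idea for the gyroscope can be sketched in two steps: estimate a constant offset from readings taken at rest, then integrate the offset-corrected angular rate over time to recover the rotation angle. The example below uses trapezoidal integration at a fixed sample interval. The integration scheme, function names, and sample values are assumptions for illustration rather than the tutorial's exact code.

```python
def gyro_offset(rest_samples):
    """Estimate the gyroscope offset as the mean reading while stationary."""
    return sum(rest_samples) / len(rest_samples)


def integrate_rate(rates_dps, dt_s, offset=0.0):
    """Integrate angular rate (deg/s) into an angle (deg).

    Uses the trapezoidal rule with a fixed sample interval `dt_s`.
    """
    angle = 0.0
    for previous, current in zip(rates_dps, rates_dps[1:]):
        angle += 0.5 * ((previous - offset) + (current - offset)) * dt_s
    return angle


if __name__ == "__main__":
    offset = gyro_offset([1.1, 0.9, 1.0, 1.0])  # made-up at-rest readings
    rates = [91.0] * 11                         # 1 second of data at 10 Hz
    print(integrate_rate(rates, 0.1, offset))   # approximately 90 degrees
```

Displacement from the accelerometer requires integrating twice, so any residual offset grows much faster there, which is consistent with the lower accuracy noted above.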

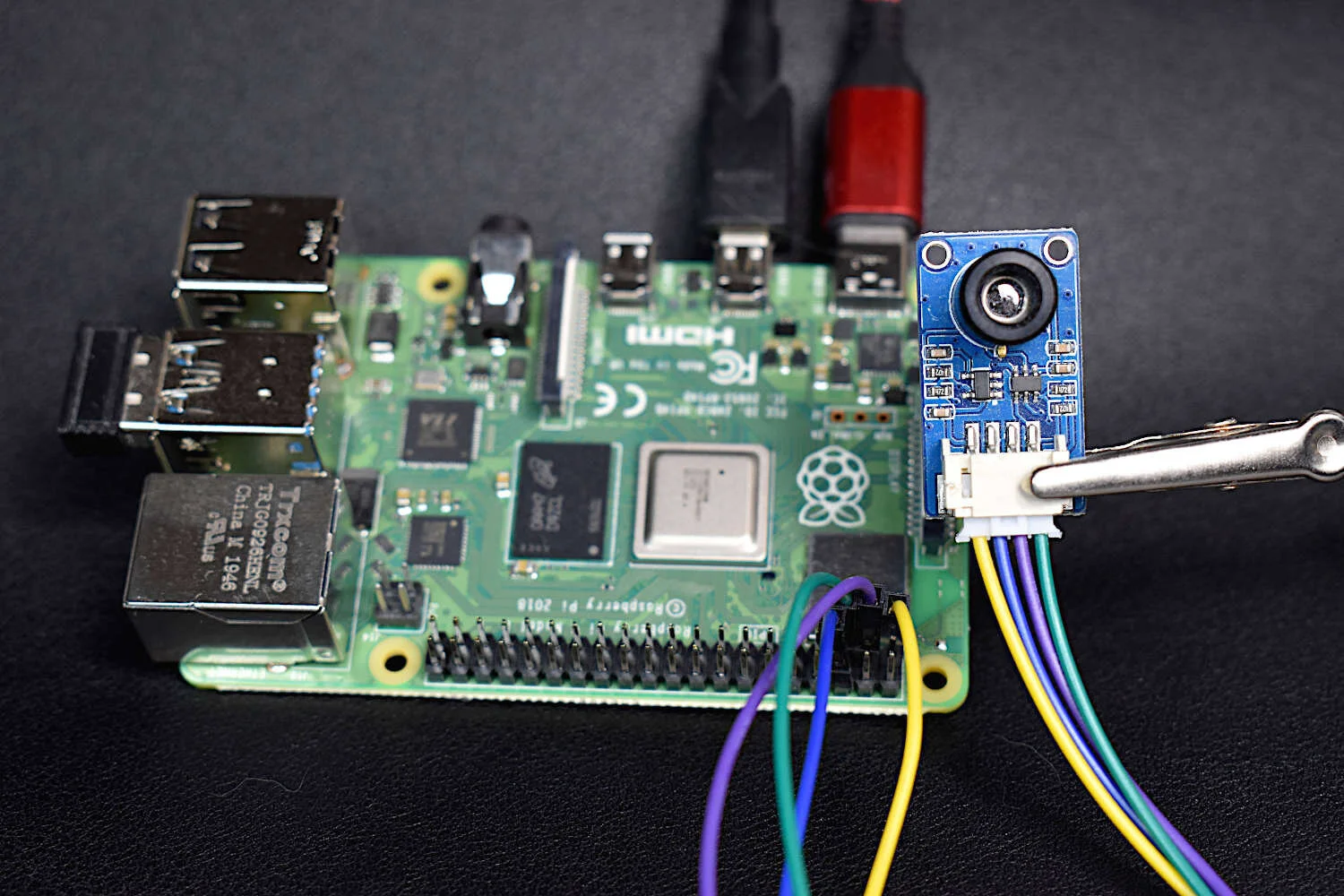

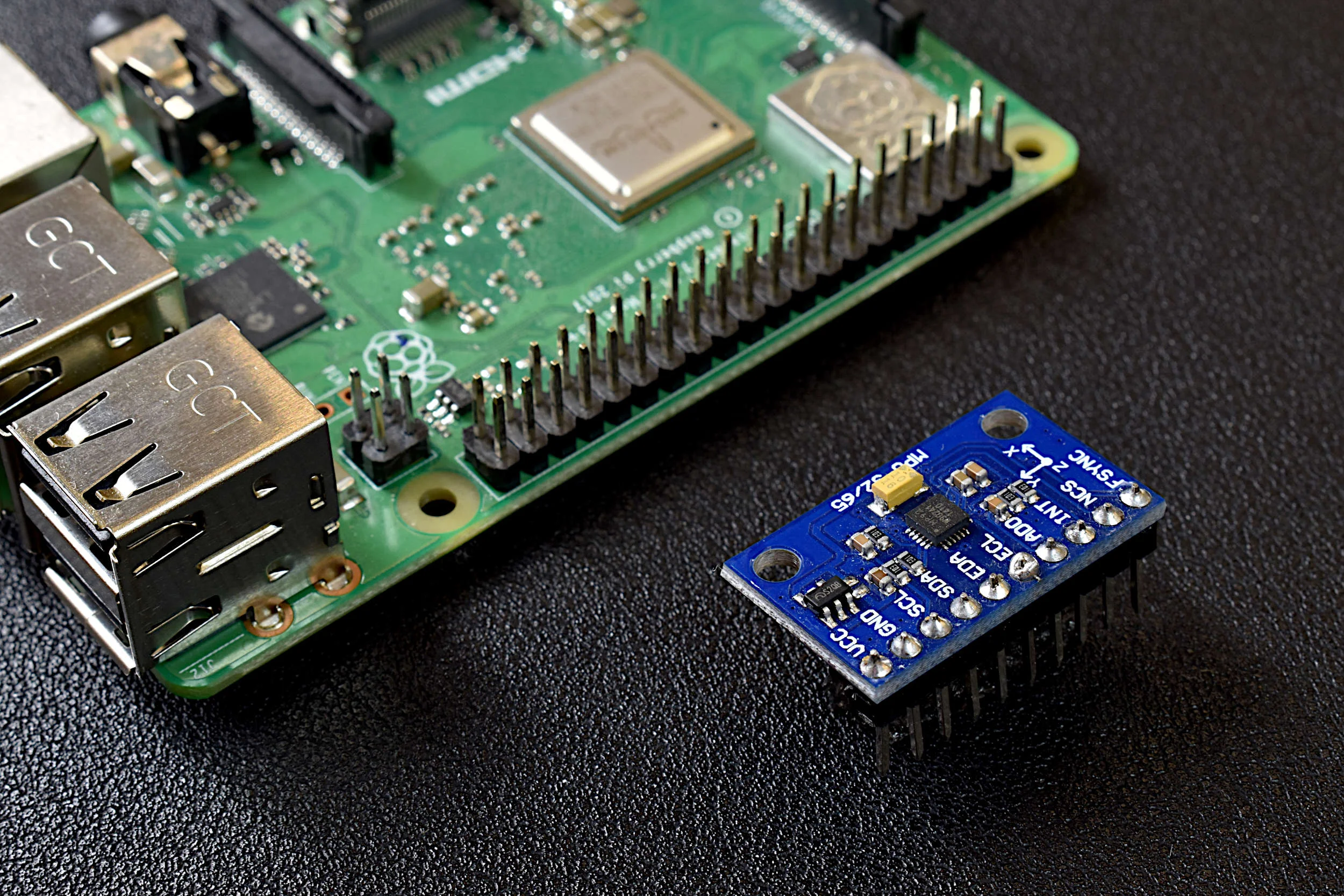

Inertial measurement units (IMUs) can consist of a single sensor or collection of sensors that capture data meant to measure inertial movements in a given reference frame. Acceleration, speed of rotation, and magnetic field strength are examples of quantities measured by the sensors contained in an IMU. IMUs can be found in applications ranging from smart devices, medical rehabilitation, general robotics, and manufacturing control to aviation and navigation, sports learning, and augmented and virtual reality systems. Inertial measurement units have become increasingly popular as their form factors shrink and computational power increases. The ability to use IMUs for indoor/outdoor tracking, motion detection, force estimation, and orientation detection, among others, has made inertial sensors nearly ubiquitous in smart phones, smart watches, drones, and other common electronic devices. The internet is full of projects involving accelerometers, gyroscopes, and magnetometers, but few cover the full calibration of all three sensors. In this project, the manual calibration of a nine degree-of-freedom (9-DoF) IMU is explored. A common MPU9250 IMU is attached to a cube to manually find the calibration coefficients of the three sensors contained within the IMU: accelerometer, gyroscope, and magnetometer. The IMU is wired to a Raspberry Pi, which will allow for high-speed data acquisition of all nine components of the IMU.

The INMP441 MEMS microphone is used to record audio using a Raspberry Pi board through the inter-IC sound (I²S or I2S) bus. The I2S standard uses three wires to record data, keep track of timing (clock), and determine whether an input/output is in the left channel or right channel. First, the Raspberry Pi (RPi) needs to be prepped for I2S communication by creating/enabling an audio port in the RPi OS system. This audio port will then be used to communicate with MEMS microphones and consequently record stereo audio (one left channel, one right channel). Python is then used to record the 2-channel audio via the pyaudio Python audio library. Finally, the audio data will be visualized and analyzed in Python with simple digital signal processing methods that include Fast Fourier Transforms (FFTs), noise subtraction, and frequency spectrum peak detection.
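The analysis steps named above (spectrum, noise subtraction, peak detection) can be sketched on a synthetic signal. To keep the example dependency-free, it uses a naive discrete Fourier transform in place of an FFT; the result is the same spectrum, only computed more slowly. The function names, the sample rate, and the test tones are all made up for illustration.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Single-sided amplitude spectrum via a naive DFT (not an FFT).

    The 2/n scaling gives tone amplitudes for every bin except DC.
    """
    n = len(samples)
    mags = []
    for k in range(n // 2):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        mags.append(abs(acc) * 2.0 / n)
    return mags


def peak_frequency(samples, sample_rate, noise_mags=None):
    """Frequency (Hz) of the largest spectral peak, ignoring the DC bin.

    If `noise_mags` is given, it is subtracted bin by bin first.
    """
    mags = dft_magnitudes(samples)
    if noise_mags is not None:
        mags = [max(m - nm, 0.0) for m, nm in zip(mags, noise_mags)]
    k = max(range(1, len(mags)), key=lambda i: mags[i])
    return k * sample_rate / len(samples)


if __name__ == "__main__":
    fs, n = 6400, 64
    tone = [math.sin(2 * math.pi * 800 * t / fs) for t in range(n)]
    print(peak_frequency(tone, fs))
```

For real recordings, `noise_mags` would come from a spectrum captured with no source present, so that persistent background peaks are removed before the search.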

Thermal cameras are similar to standard cameras in that they use light to record images. The most significant distinction is that thermal cameras detect and filter light such that only the infrared region of the electromagnetic spectrum is recorded, not the visible region [read more about infrared cameras here]. Shortly after the discovery of the relationship between radiation and the heat given off by black bodies, infrared detectors were patented as a way to predict temperature via non-contact instrumentation. In recent decades, as integrated circuits have shrunk in size, infrared detectors have become commonplace in applications of non-destructive testing, medical device technology, and motion detection of heated bodies. The sensor used here is the MLX90640 [datasheet], which is a 768 pixel (24x32) thermal camera. It uses an array of infrared detectors (and likely filters) to detect the radiation given off by objects. Along with a Raspberry Pi computer, the MLX90640 will be used to map and record fairly high-resolution temperature maps. Using Python, we will be able to push the RPi to its limits by interpolating the MLX90640 to create a 3 frame-per-second (fps) thermal camera at 240x320 pixel resolution.
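Going from a 24x32 frame to a 240x320 image is a 10x upsampling in each direction. The sketch below does this with plain bilinear interpolation in pure Python. Bilinear interpolation is chosen here only for illustration and may not be the method the tutorial uses; the function name and the synthetic frame are hypothetical.

```python
def upsample_bilinear(frame, factor):
    """Upsample a 2D list of temperatures by `factor` using bilinear interpolation."""
    rows, cols = len(frame), len(frame[0])
    out_rows, out_cols = rows * factor, cols * factor
    out = []
    for i in range(out_rows):
        # Map the output row back to a fractional row in the source frame.
        y = i * (rows - 1) / (out_rows - 1)
        y0 = int(y)
        y1 = min(y0 + 1, rows - 1)
        fy = y - y0
        row = []
        for j in range(out_cols):
            x = j * (cols - 1) / (out_cols - 1)
            x0 = int(x)
            x1 = min(x0 + 1, cols - 1)
            fx = x - x0
            # Blend along x on the two neighboring rows, then along y.
            top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
            bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    # Synthetic 24x32 frame with a simple temperature gradient.
    frame = [[float(r + c) for c in range(32)] for r in range(24)]
    image = upsample_bilinear(frame, 10)
    print(len(image), "x", len(image[0]))
```

A pure-Python loop like this is far too slow for real-time use on a Raspberry Pi; an array library would be the practical choice for reaching several frames per second.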

In this tutorial, an ultrasonic sensor (HC-SR04) will be used in place of a radio emitter, and a plan position indicator (PPI) will be constructed in Python by recording the angular movements of a servo motor. An Arduino board will record the ranging data from the ultrasonic sensor while also controlling and outputting the angular position of the servo motor. This will permit the creation of a PPI for visualizing the position of various objects surrounding the radar system.
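The data behind a plan position indicator is a set of (angle, range) pairs. As a minimal sketch, the code below converts an ultrasonic echo time into a distance and then maps each angle and distance onto x/y coordinates that could be plotted. It assumes a speed of sound of 343 m/s and uses hypothetical function names; the tutorial's own data format from the Arduino may differ.

```python
import math

SPEED_OF_SOUND_CM_S = 34300.0  # assumed, air at roughly room temperature


def echo_to_distance_cm(echo_s):
    """Distance from the round-trip echo time of an ultrasonic pulse."""
    return SPEED_OF_SOUND_CM_S * echo_s / 2.0


def polar_to_xy(angle_deg, distance_cm):
    """Convert a servo angle and measured range to Cartesian coordinates."""
    theta = math.radians(angle_deg)
    return distance_cm * math.cos(theta), distance_cm * math.sin(theta)


def sweep_to_points(sweep):
    """Convert (angle_deg, echo_s) pairs from one sweep into (x, y) points."""
    return [polar_to_xy(angle, echo_to_distance_cm(echo)) for angle, echo in sweep]


if __name__ == "__main__":
    # Made-up sweep: three angles with their echo times in seconds.
    print(sweep_to_points([(0, 0.002), (90, 0.01), (180, 0.004)]))
```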

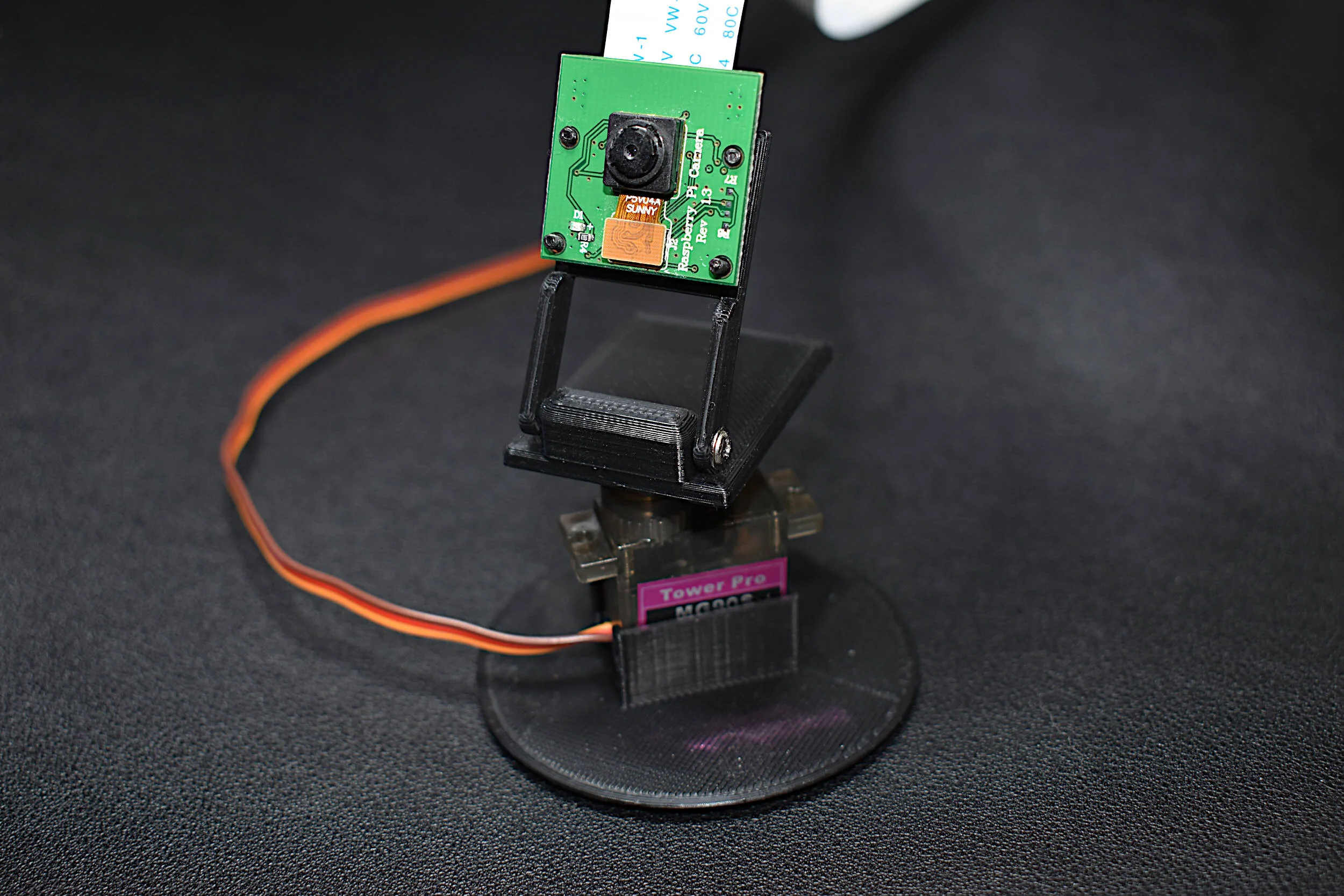

In this tutorial, the RPi is used to demonstrate pulse-width modulation (PWM) and apply it to servo motor control. Then, the servo is used to control the panning of a camera, which is connected to the native camera port on the Raspberry Pi. This tutorial is a simple introduction that can be expanded into a full 360° controllable camera project, a project involving a robotic arm, or any project involving servo motors or PWM-controlled devices.
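Servo control with PWM comes down to mapping a target angle to a pulse width and then to a duty cycle. The sketch below assumes a 50 Hz signal with a 1.0 ms pulse at 0° and a 2.0 ms pulse at 180°. These are typical hobby-servo values, not figures from the tutorial, and real servos often need slightly different limits. The function name is hypothetical.

```python
PWM_FREQ_HZ = 50.0   # assumed servo PWM frequency
MIN_PULSE_MS = 1.0   # assumed pulse width at 0 degrees
MAX_PULSE_MS = 2.0   # assumed pulse width at 180 degrees


def angle_to_duty_cycle(angle_deg):
    """Duty cycle (percent) that places the servo at `angle_deg`."""
    if not 0.0 <= angle_deg <= 180.0:
        raise ValueError("angle must be between 0 and 180 degrees")
    pulse_ms = MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle_deg / 180.0
    period_ms = 1000.0 / PWM_FREQ_HZ
    return 100.0 * pulse_ms / period_ms


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, "deg ->", angle_to_duty_cycle(angle), "% duty")
```

On a Raspberry Pi, the resulting percentage is the kind of value passed to a PWM duty-cycle call, for example `ChangeDutyCycle` in the RPi.GPIO library.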

A Raspberry Pi will be used to read the MPU9250 3-axis acceleration, 3-axis angular rotation speed, and 3-axis magnetic flux (MPU9250 product page can be found here). The output and limitations of the MPU9250 will be explored, which will help define the limitations of applications for each sensor. This is only the first entry in the MPU9250 IMU series. Over the course of the series, we will apply advanced techniques in Python to analyze each of the nine axes of the IMU and develop real-world applications for the sensor, which may be useful to engineers interested in vibration analysis, navigation, vehicle control, and many other areas.

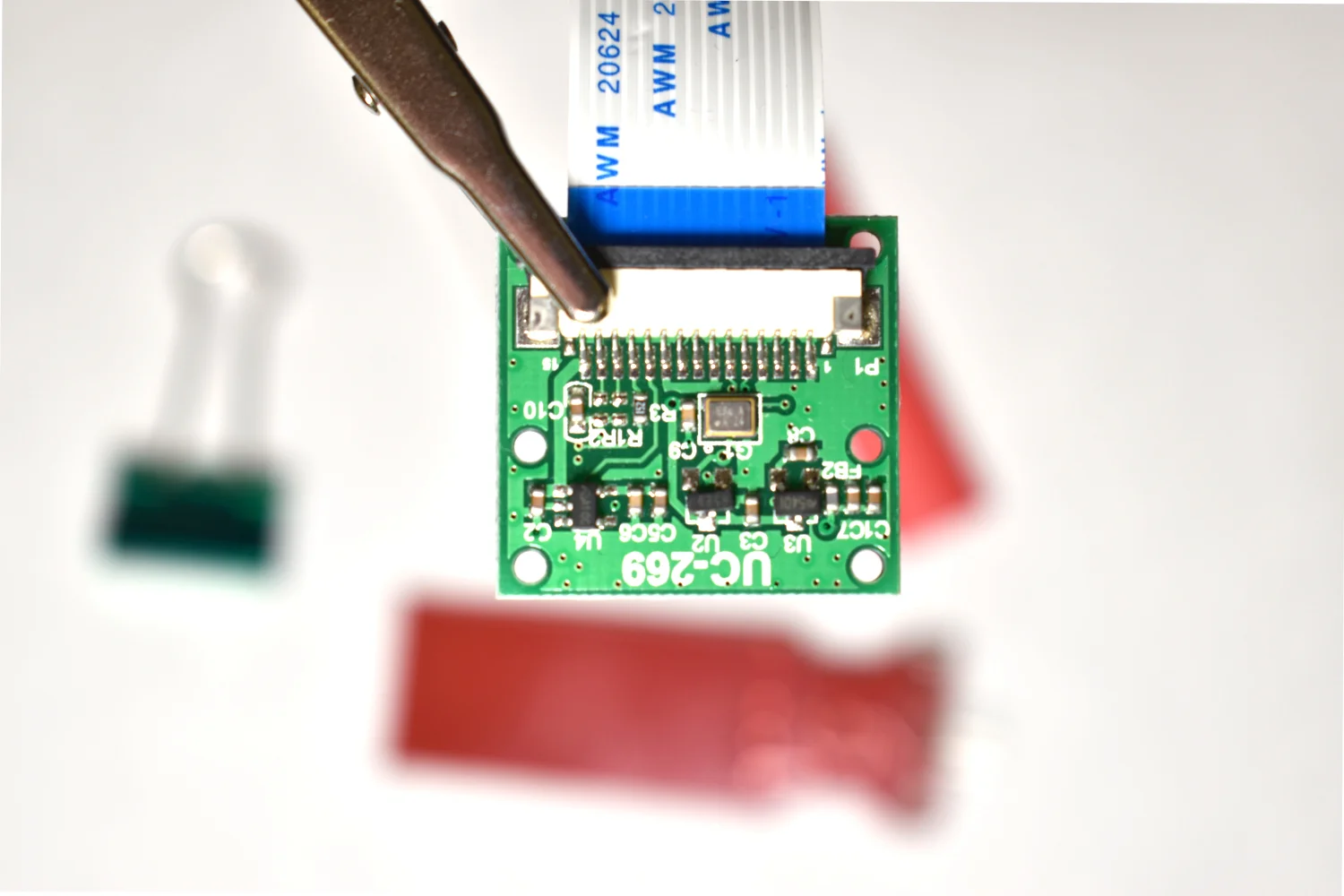

The picamera and edge detection routines will be used to identify individual objects, predict each object’s color, and approximate each object’s orientation (rotation). By the end of the tutorial, the user will be capable of dividing an image into multiple objects, determining the rotation of the object, and drawing a box around the subsequent object.

In this entry, image processing-specific Python toolboxes are explored and applied to object detection to create algorithms that identify multiple objects and approximate their location in the frame using the picamera and Raspberry Pi. The methods used in this tutorial cover edge detection algorithms as well as some simple machine learning algorithms that allow us to identify individual objects in a frame.

The Raspberry Pi has a dedicated camera input port that allows users to record HD video and high-resolution photos. Using Python and specific libraries written for the Pi, users can create tools that take photos and video, and analyze them in real-time or save them for later processing. In this tutorial, I will use the 5MP picamera v1.3 to take photos and analyze them with Python and a Pi Zero W. This creates a self-contained system that could work as an item identification tool, security system, or other image processing application. The goal is to establish the basics of recording video and images onto the Pi, and using Python and statistics to analyze those images.
