Multiple Object Detection with Python and Raspberry Pi

“As an Amazon Associates Program member, clicking on links may result in Maker Portal receiving a small commission that helps support future projects.”

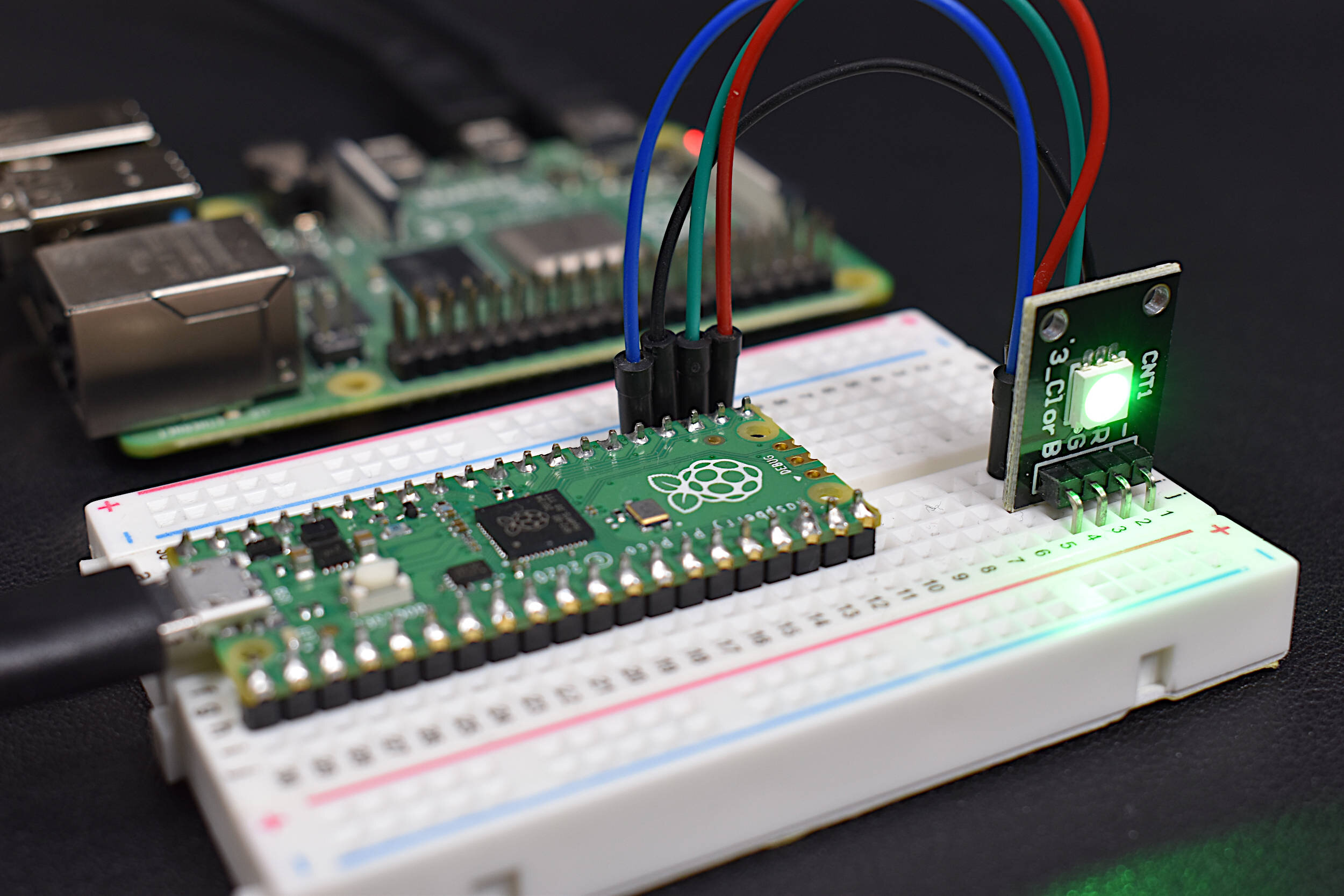

This is the third entry in the Raspberry Pi and Python image processing tutorial series. In Parts I and II, the Raspberry Pi's picamera was introduced along with some edge detection routines. Those tutorials were the stepping stones needed to understand how the picamera and Python work together and how individual objects can be identified. Part I covers the picamera introduction and simple image processing, and Part II covers edge detection using machine learning and clustering algorithms.

In this tutorial, the picamera and edge detection routines introduced in Parts I and II will be employed to identify individual objects, predict each object's color, and approximate each object's orientation (rotation). By the end of the tutorial, the user will be able to divide an image into multiple objects, determine the rotation of each object, and draw a box around it.

Picking up from the final code in Part II of the tutorial series (available on GitHub), we can start by analyzing the DBSCAN clustering algorithm provided by the scikit-learn (sklearn) toolbox. Using the code below, we can produce a scatter plot showing the object boundaries in an image:

```python
import time
from picamera import PiCamera
import scipy.ndimage as scimg
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import DBSCAN

# picamera setup
h = 640 # largest resolution length
cam_res = (int(h), int(0.75*h))
# resizing to picamera's required ratios
cam_res = (int(32*np.floor(cam_res[0]/32)), int(16*np.floor(cam_res[1]/16)))
cam = PiCamera(resolution=cam_res)
# preallocating image variables
data = np.empty((cam_res[1], cam_res[0], 3), dtype=np.uint8)
x, y = np.meshgrid(np.arange(cam_res[0]), np.arange(cam_res[1]))

cam.capture(data, 'rgb') # capture image

# gradient magnitude of the red channel via Prewitt filters (Canny-style, no angle)
# fourier_gaussian is meant for FFT'd input; on raw pixels with sigma=0.01 it barely changes them
gaus = scimg.fourier_gaussian(data[:, :, 0], sigma=0.01)
can_x = scimg.prewitt(gaus, axis=0)
can_y = scimg.prewitt(gaus, axis=1)
can = np.hypot(can_x, can_y)

# pulling out object edges
fig3, ax3 = plt.subplots(1, 2, figsize=(12, 4))
bin_size = 100 # total bins to show
percent_cutoff = 0.02 # cutoff once main peak tapers to 2% of max
hist_vec = np.histogram(can.ravel(), bins=bin_size)
hist_x, hist_y = hist_vec[0], hist_vec[1] # bin counts, bin edges
for ii in range(np.argmax(hist_x), bin_size):
    hist_max = hist_y[ii]
    if hist_x[ii] < percent_cutoff*np.max(hist_x):
        break

# sklearn section for clustering
x_cluster = x[can > hist_max]
y_cluster = y[can > hist_max]
scat_pts = np.column_stack((x_cluster, y_cluster)) # (N, 2) array of edge points
min_samps = 15
leaf_sz = 10
max_dxdy = 25
# clustering analysis for object detection
clustering = DBSCAN(eps=max_dxdy, min_samples=min_samps, algorithm='kd_tree',
                    leaf_size=leaf_sz).fit(scat_pts)
im_show = ax3[0].imshow(data, origin='lower')

# plotting the scatter of each individual object
for ii in np.unique(clustering.labels_):
    if ii == -1: # label -1 marks DBSCAN noise points
        continue
    clus_dat = np.where(clustering.labels_ == ii)
    x_pts = x_cluster[clus_dat]
    y_pts = y_cluster[clus_dat]
    ax3[1].plot(x_pts, y_pts, marker='.', linestyle='', label='Object {0:2.0f}'.format(ii))
ax3[1].legend()
ax3[1].set_xlim(np.min(x), np.max(x))
ax3[1].set_ylim(np.min(y), np.max(y))
fig3.savefig('dbscan_main_blog.png', dpi=150, facecolor=[252/255, 252/255, 252/255])
plt.show()
```
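The threshold search in the histogram loop above is the least obvious step. A minimal sketch that isolates it as a function (the name `histogram_edge_threshold` is hypothetical, not part of the original code) and runs it on synthetic gradient magnitudes instead of a camera frame:

```python
import numpy as np

def histogram_edge_threshold(edge_mag, bin_size=100, percent_cutoff=0.02):
    # histogram of gradient magnitudes: counts per bin and the bin edges
    counts, edges = np.histogram(edge_mag.ravel(), bins=bin_size)
    peak = int(np.argmax(counts))  # tallest bin = weak background gradients
    threshold = edges[peak]
    for ii in range(peak, bin_size):
        threshold = edges[ii]  # left edge of the current bin
        if counts[ii] < percent_cutoff * counts[peak]:
            break  # the main peak has tapered off; stop here
    return threshold

# synthetic gradient-magnitude image: weak noisy background plus one strong edge
rng = np.random.default_rng(0)
edge_mag = rng.exponential(0.05, size=(120, 160))
edge_mag[40:42, 30:130] = 5.0
thr = histogram_edge_threshold(edge_mag)
edge_mask = edge_mag > thr  # pixels that would become DBSCAN scatter points
print(thr, edge_mask.sum())
```

Because the threshold is the left edge of the first bin whose count falls below the cutoff, a larger `percent_cutoff` stops the walk earlier and keeps more (weaker) edge pixels.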

As the plot and code above show, the tools developed in Part II of this series allow us to analyze each individual object in the image frame. In the case above, four objects have been identified. Next, we want to approximate the rotation of each object and then find the tightest boundaries for drawing a box around it. Once the boundaries are approximated (after rotation), we can sum the contributions of each RGB channel to approximate the color of each object.
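The color step is described here but not implemented in this post. One minimal sketch, assuming each object is available as a boolean pixel mask over the RGB frame (for example, the pixels inside its bounding box), sums each channel over the object and divides by the pixel count. The helpers `object_mean_color` and `dominant_channel` are hypothetical, and naming a color by its largest channel is a deliberately crude assumption:

```python
import numpy as np

def object_mean_color(rgb, mask):
    """Average R, G, B over the pixels where mask is True."""
    pixels = rgb[mask].astype(float)         # shape (n_pixels, 3)
    return pixels.sum(axis=0) / len(pixels)  # per-channel sum, then normalize

def dominant_channel(mean_rgb):
    """Crude color label: the channel with the largest mean value."""
    return ('red', 'green', 'blue')[int(np.argmax(mean_rgb))]

# synthetic frame: grey background with one red square "object"
rgb = np.full((48, 64, 3), 100, dtype=np.uint8)
rgb[10:20, 10:20] = (200, 30, 30)
mask = np.zeros(rgb.shape[:2], dtype=bool)
mask[10:20, 10:20] = True

mean_rgb = object_mean_color(rgb, mask)
print(mean_rgb, dominant_channel(mean_rgb))
```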

If we look closely at the clustering step, we can see that its computation time is largely driven by the number of scatter points passed to DBSCAN. In the next section, I downscale the image so that we can approximate object boundaries in real time.

There are many ways to downscale an image; however, for our particular case, we want to use the most efficient methods (for real-time analysis) while also preserving the proper object boundaries (within some degree of error). The methods used can therefore be altered based on the specific use, particularly with respect to object sizes in the image frame. SciPy's ndimage module facilitates image scaling quite nicely with a function called 'zoom.'

The ndimage zoom function resamples an image by a zoom factor using spline interpolation of the requested order. A zoom factor less than 1 shrinks the image, which improves computational performance. How far we can scale depends on the object size relative to the image frame; however, the savings are significant at any factor because the scaling applies in both dimensions (a factor of 0.25 leaves 1/16 of the pixels). Below is a simple implementation and the resulting scaled DBSCAN analysis. Because DBSCAN relies on nearest-neighbor queries, scaling can reduce the computation dramatically: in my particular case, DBSCAN went from 1.95s to 0.07s, a reduction of 96%! Depending on the particular application, this may be acceptable or highly inaccurate, so test on each object size, shape, and frame of interest first.

```python
import time
from picamera import PiCamera
import scipy.ndimage as scimg
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import DBSCAN

# picamera setup
h = 640 # largest resolution length
cam_res = (int(h), int(0.75*h))
# resizing to picamera's required ratios
cam_res = (int(32*np.floor(cam_res[0]/32)), int(16*np.floor(cam_res[1]/16)))
cam = PiCamera(resolution=cam_res)
# preallocating image variables
data = np.empty((cam_res[1], cam_res[0], 3), dtype=np.uint8)
cam.capture(data, 'rgb') # capture image

fig3, ax3 = plt.subplots(2, 1, figsize=(10, 7))
for qq in range(0, 2):
    if qq == 0: # full-resolution grayscale image
        min_samps = 15
        leaf_sz = 15
        max_dxdy = 25
        gaus = scimg.fourier_gaussian(np.mean(data, 2), sigma=0.01)
        x, y = np.meshgrid(np.arange(np.shape(data)[1]), np.arange(np.shape(data)[0]))
    if qq == 1: # grayscale image downscaled with zoom
        scale_val = 0.25
        min_samps = 15
        leaf_sz = 10
        max_dxdy = 25 # same eps: x, y below stay in full-resolution pixel units
        gaus = scimg.fourier_gaussian(scimg.zoom(np.mean(data, 2), scale_val), sigma=0.01)
        x, y = np.meshgrid(np.arange(0, np.shape(data)[1], 1/scale_val),
                           np.arange(0, np.shape(data)[0], 1/scale_val))
    # gradient magnitude via Prewitt filters (Canny-style, no angle)
    can_x = scimg.prewitt(gaus, axis=0)
    can_y = scimg.prewitt(gaus, axis=1)
    can = np.hypot(can_x, can_y)

    # pulling out object edges
    bin_size = 100 # total bins to show
    percent_cutoff = 0.018 # cutoff once main peak tapers to 1.8% of max
    hist_vec = np.histogram(can.ravel(), bins=bin_size)
    hist_x, hist_y = hist_vec[0], hist_vec[1] # bin counts, bin edges
    for ii in range(np.argmax(hist_x), bin_size):
        hist_max = hist_y[ii]
        if hist_x[ii] < percent_cutoff*np.max(hist_x):
            break

    # sklearn section for clustering
    x_cluster = x[can > hist_max]
    y_cluster = y[can > hist_max]
    scat_pts = np.column_stack((x_cluster, y_cluster)) # (N, 2) array of edge points

    # clustering analysis for object detection (timed)
    t1 = time.time()
    clustering = DBSCAN(eps=max_dxdy, min_samples=min_samps, algorithm='ball_tree',
                        leaf_size=leaf_sz).fit(scat_pts)
    nn_time = time.time() - t1

    for ii in np.unique(clustering.labels_):
        if ii == -1: # label -1 marks DBSCAN noise points
            continue
        clus_dat = np.where(clustering.labels_ == ii)
        x_pts = x_cluster[clus_dat]
        y_pts = y_cluster[clus_dat]
        ax3[qq].plot(x_pts, y_pts, marker='.', linestyle='')
    ax3[qq].set_xlim(np.min(x), np.max(x))
    ax3[qq].set_ylim(np.min(y), np.max(y))
    # label each panel with the analyzed image size (width x height) and DBSCAN run time
    img_size = '{0}x{1}'.format(np.shape(gaus)[1], np.shape(gaus)[0])
    ax3[qq].annotate('Image Size: {0}, $t = ${1:2.2f}s'.format(img_size, nn_time),
                     (np.min(x)+3, np.max(y)-15), xytext=(0, 0), textcoords='offset points',
                     bbox=dict(fc='0.9', boxstyle='round'))
plt.show()
```
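A quick way to see why the same `max_dxdy` (DBSCAN `eps`) works in both passes: the downscaled image is indexed with a meshgrid whose step is `1/scale_val`, so the scatter coordinates stay in full-resolution pixel units. The sketch below uses random data as a stand-in for `np.mean(data, 2)` and checks that the zoomed image and that grid line up. It assumes the frame dimensions are multiples of `1/scale_val`, as 640x480 is; otherwise `zoom` (which rounds the output size) and `np.arange` (which does not) can disagree by one row or column.

```python
import numpy as np
import scipy.ndimage as scimg

# random stand-in for np.mean(data, 2) from a 640x480 capture
gray = np.random.default_rng(1).random((480, 640))
scale_val = 0.25
small = scimg.zoom(gray, scale_val)  # cubic spline interpolation (order=3 by default)

# coordinates of the downscaled pixels, expressed in full-resolution pixel units
x, y = np.meshgrid(np.arange(0, gray.shape[1], 1/scale_val),
                   np.arange(0, gray.shape[0], 1/scale_val))
print(small.shape, x.shape)    # (120, 160) (120, 160)
print(gray.size / small.size)  # 16.0
print(x[0, :3], y[:3, 0])      # [0. 4. 8.] [0. 4. 8.]
```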

There are several side effects that are important to note when scaling:

Object boundaries will blur more due to spline interpolation between pixels

DBSCAN parameters may need to be altered if edges from neighboring objects end up too close together

The histogram percent cutoff may need to be increased in order to catch more points between blurred segments

Now that we have an efficient tool for marking object boundaries, we can begin to approximate object orientations in order to draw accurate boundaries around each object. The method for determining the rotation of an object lies in the covariance of the scatter points and the eigenvector decomposition of that covariance. We can take an eigenvector, compute the arctangent of the ratio of its y and x components, and get an approximation of the orientation 'spread' of the scatter points. For a great resource on scatter covariance, orientation, and eigenvectors, see the tutorial "A geometric interpretation of the covariance matrix."
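To see the eigenvector idea in isolation, the sketch below builds a synthetic object (uniform points in a 100 x 30 rectangle rotated by 30 degrees), computes the covariance with `np.cov`, and takes the angle of the eigenvector belonging to the largest eigenvalue. It uses `np.arctan2` rather than a plain arctangent so the quadrant is handled, and folds the result into [-90, 90] because an axis has no preferred direction; `principal_angle_deg` is a hypothetical helper name. With `imshow`'s default `origin='upper'` the y axis points down, so a positive angle appears clockwise on screen.

```python
import numpy as np

def principal_angle_deg(x_pts, y_pts):
    """Angle in degrees, folded into [-90, 90], of the scatter's major axis."""
    evals, evecs = np.linalg.eigh(np.cov(x_pts, y_pts))  # eigenvalues ascending
    major = evecs[:, -1]  # eigenvector (a column) for the largest eigenvalue
    angle = np.degrees(np.arctan2(major[1], major[0]))
    # an axis has no preferred direction, so map e.g. -150 to 30
    if angle > 90:
        angle -= 180
    elif angle < -90:
        angle += 180
    return angle

# synthetic object: uniform points in a 100 x 30 rectangle rotated by 30 degrees
rng = np.random.default_rng(2)
pts = rng.uniform([-50, -15], [50, 15], size=(2000, 2)).T  # shape (2, N)
theta = np.radians(30)
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
x_pts, y_pts = R @ pts + np.array([[200.0], [150.0]])  # rotate, then shift
angle = principal_angle_deg(x_pts, y_pts)
print(round(angle, 1))
```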

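2-D scatter covariance can be computed using the following matrix, where N is the number of scatter points and bars denote means:

$$\Sigma = \begin{bmatrix} \sigma_{xx} & \sigma_{xy} \\ \sigma_{xy} & \sigma_{yy} \end{bmatrix}, \qquad \sigma_{ab} = \frac{1}{N-1}\sum_{i=1}^{N} (a_i - \bar{a})(b_i - \bar{b})$$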

Now, if we use a single object and rotate it with respect to the image frame, we can see the importance of the covariance:

We can see a few things in the covariance results:

Anti-symmetry and symmetry in the scatter

Geometric analog to the covariance vector

Relationship to rotation matrix

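As a result of the above, we can use the eigenvectors of the covariance matrix to approximate the angle of rotation of the object scatter. Assuming the data matrix, D, is the object orientation with no rotation, the rotated object data matrix is D' = RD for some rotation matrix R. Because R is orthogonal, its inverse is its transpose, and with the eigenvector matrix V (eigenvectors as columns) standing in for R, D' can be unrotated using the following equation (up to the sign and ordering of the axes):

$$D = R^{-1} D' = R^{T} D' \approx V^{T} D'$$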

This method will also be used to find the width and height of the objects for drawing boxes around object boundaries. The general inverse rotation multiplication can be done as shown in the code below:

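```python
import numpy as np

# x_pts, y_pts: the scatter points of one object (from the DBSCAN loop)
# calculate eigenvectors (the columns of evecs) from the covariance of the x,y scatter
evals, evecs = np.linalg.eigh(np.cov(x_pts, y_pts))
major = evecs[:, -1] # eigh sorts eigenvalues ascending, so the last column is the major axis
angle = np.arctan2(major[1], major[0]) # angle of the major axis [radians]
rot_vec = np.matmul(evecs.T, [x_pts, y_pts]) # unrotate: evecs is orthonormal, so evecs.T is its inverse
```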
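Continuing with a synthetic object, projecting the points with `evecs.T` turns the rotated scatter back into an axis-aligned one, so the width and height are just the ranges of the projected coordinates, and mapping the minimum corner back with `evecs` gives an anchor for a rotated box. The helper name `eigen_frame_box` and its sign conventions (major axis first, pointing toward +x, det(V) = +1) are assumptions of this sketch; the sign fixes matter because `eigh` may return either sign for each eigenvector.

```python
import numpy as np

def eigen_frame_box(x_pts, y_pts):
    """Return (evecs, width, height, corner) of a box aligned with the scatter's axes."""
    evals, evecs = np.linalg.eigh(np.cov(x_pts, y_pts))
    evecs = evecs[:, ::-1].copy()      # major axis first
    if evecs[0, 0] < 0:                # point the major axis toward +x
        evecs[:, 0] = -evecs[:, 0]
    if np.linalg.det(evecs) < 0:       # keep a proper rotation (det = +1)
        evecs[:, 1] = -evecs[:, 1]
    rot_vec = evecs.T @ np.vstack((x_pts, y_pts))    # unrotated coordinates
    width = rot_vec[0].max() - rot_vec[0].min()      # extent along the major axis
    height = rot_vec[1].max() - rot_vec[1].min()     # extent along the minor axis
    corner = evecs @ np.array([rot_vec[0].min(), rot_vec[1].min()])  # back to image coords
    return evecs, width, height, corner

# synthetic object: 100 x 30 rectangle rotated by 30 degrees, shifted to (200, 150)
rng = np.random.default_rng(3)
pts = rng.uniform([-50, -15], [50, 15], size=(2000, 2)).T
theta = np.radians(30)
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
x_pts, y_pts = R @ pts + np.array([[200.0], [150.0]])

evecs, width, height, corner = eigen_frame_box(x_pts, y_pts)
angle = np.degrees(np.arctan2(evecs[1, 0], evecs[0, 0]))
print(round(width, 1), round(height, 1), corner.round(1), round(angle, 1))
```

With these conventions, a rectangle anchored at `corner`, with `width`, `height`, and `angle`, traces the box: its width side runs along the first column of `evecs` and its height side along the second.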

In the next section, I demonstrate how to draw boxes around the objects using the width and height of the rotated objects, then re-rotate the boxes to fit the original images.

Matplotlib also has a module (matplotlib.patches, which includes patches.Rectangle) for drawing shapes like ellipses and rectangles. Two imports are needed to start drawing object boundaries:

```python
from matplotlib.collections import PatchCollection
from matplotlib.patches import Rectangle
```

The basic usage for drawing a rectangle is as follows:

Create the rectangle by specifying its anchor corner coordinates, angle of rotation, and the width and height of the object

Attach the rectangle to a patch collection variable

Add the patch collection variable to the plot axis

The basic process is shown below as an example:

```python
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.collections import PatchCollection
from matplotlib.patches import Rectangle

plt.style.use('ggplot')
fig, ax = plt.subplots(1, 1, figsize=(10, 6))
rot_angle = 30 # degrees, counterclockwise about rect_origin
rect_width = 100
rect_height = 50
rect_origin = (25, 55) # anchor corner (lower-left before rotation)
obj_rect = Rectangle(rect_origin, rect_width, rect_height, angle=rot_angle)
pc = PatchCollection([obj_rect], facecolor='none', edgecolor='r') # 'none' = unfilled
ax.add_collection(pc) # also updates the axis data limits
ax.autoscale_view() # fit the view to the rectangle in data coordinates
ax.set_aspect('equal') # draw the 30 degree angle true to scale
plt.show()
```
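`Rectangle`'s `angle` rotates the shape counterclockwise, in data coordinates, about its anchor `xy`. The sketch below reproduces that geometry by hand to get the four corners, whose minimum and maximum give data-space axis limits; `rectangle_corners` is a hypothetical helper, not a matplotlib function.

```python
import numpy as np

def rectangle_corners(xy, width, height, angle_deg):
    """Corners of a rectangle anchored at xy, rotated counterclockwise by angle_deg about xy."""
    t = np.radians(angle_deg)
    u = np.array([np.cos(t), np.sin(t)])   # direction of the width side
    v = np.array([-np.sin(t), np.cos(t)])  # direction of the height side
    p0 = np.asarray(xy, dtype=float)
    return np.array([p0, p0 + width*u, p0 + width*u + height*v, p0 + height*v])

corners = rectangle_corners((25, 55), 100, 50, 30)
xmin, ymin = corners.min(axis=0)
xmax, ymax = corners.max(axis=0)
print(corners.round(1))
print(xmin, xmax, ymin, ymax)
```

These limits, with some padding, are what `set_xlim`/`set_ylim` need; `get_window_extent` reports display (pixel) coordinates rather than data coordinates.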

Now, to draw rectangles around the objects, we go back to the eigenvector rotation: compute each object's width and height in the rotated frame, then pass the corner coordinates, width, height, and angle of rotation to Rectangle. This is all implemented in the code below and in the plot that follows:

```python
import time
from picamera import PiCamera
import scipy.ndimage as scimg
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.collections import PatchCollection
from matplotlib.patches import Rectangle
from sklearn.cluster import DBSCAN

# picamera setup
h = 640 # largest resolution length
cam_res = (int(h), int(0.75*h))
# resizing to picamera's required ratios
cam_res = (int(32*np.floor(cam_res[0]/32)), int(16*np.floor(cam_res[1]/16)))
cam = PiCamera(resolution=cam_res)
# preallocating image variables
data = np.empty((cam_res[1], cam_res[0], 3), dtype=np.uint8)

fig, ax = plt.subplots(2, 1, figsize=(10, 6))
t1 = time.time()
cam.capture(data, 'rgb') # capture image
fig2, ax2 = plt.subplots(1, 1, figsize=(12, 8))
ax2.imshow(data)

# downscale, keeping coordinates in full-resolution pixel units
scale_val = 0.25
min_samps = 20
leaf_sz = 15
max_dxdy = 35
gaus = scimg.fourier_gaussian(scimg.zoom(np.mean(data, 2), scale_val), sigma=0.01)
x, y = np.meshgrid(np.arange(0, np.shape(data)[1], 1/scale_val),
                   np.arange(0, np.shape(data)[0], 1/scale_val))

# gradient magnitude via Prewitt filters (Canny-style, no angle)
can_x = scimg.prewitt(gaus, axis=0)
can_y = scimg.prewitt(gaus, axis=1)
can = np.hypot(can_x, can_y)
ax[0].pcolormesh(x, y, gaus, shading='auto')

# pulling out object edges
bin_size = 100 # total bins to show
percent_cutoff = 0.018 # cutoff once main peak tapers to 1.8% of max
hist_vec = np.histogram(can.ravel(), bins=bin_size)
hist_x, hist_y = hist_vec[0], hist_vec[1] # bin counts, bin edges
for ii in range(np.argmax(hist_x), bin_size):
    hist_max = hist_y[ii]
    if hist_x[ii] < percent_cutoff*np.max(hist_x):
        break

# sklearn section for clustering
x_cluster = x[can > hist_max]
y_cluster = y[can > hist_max]
scat_pts = np.column_stack((x_cluster, y_cluster)) # (N, 2) array of edge points

# clustering analysis for object detection
clustering = DBSCAN(eps=max_dxdy, min_samples=min_samps, algorithm='ball_tree',
                    leaf_size=leaf_sz).fit(scat_pts)

# looping through each individual object
for ii in np.unique(clustering.labels_):
    if ii == -1: # label -1 marks DBSCAN noise points
        continue
    clus_dat = np.where(clustering.labels_ == ii)
    x_pts = x_cluster[clus_dat]
    y_pts = y_cluster[clus_dat]
    cent_mass = (np.mean(x_pts), np.mean(y_pts))
    # skip objects whose center of mass lies within 10 px of the frame edge
    if cent_mass[0] < np.min(x)+10 or cent_mass[0] > np.max(x)-10 or \
       cent_mass[1] < np.min(y)+10 or cent_mass[1] > np.max(y)-10:
        continue
    ax[1].plot(x_pts, y_pts, marker='.', linestyle='', label='Unrotated Scatter')

    # rotation algorithm: eigenvectors (columns of evecs) of the x,y covariance
    evals, evecs = np.linalg.eigh(np.cov(x_pts, y_pts))
    evecs = evecs[:, ::-1].copy() # eigh sorts ascending; put the major axis first
    if evecs[0, 0] < 0: # point the major axis toward +x so the angle is in [-90, 90]
        evecs[:, 0] = -evecs[:, 0]
    if np.linalg.det(evecs) < 0: # make evecs a proper rotation (det = +1)
        evecs[:, 1] = -evecs[:, 1]
    angle = np.degrees(np.arctan2(evecs[1, 0], evecs[0, 0])) # major-axis angle
    print('{0:2.1f}'.format(angle))
    rot_vec = np.matmul(evecs.T, [x_pts, y_pts]) # unrotate: evecs.T is the inverse of evecs

    # rectangle algorithms: axis-aligned box in the rotated frame, corner mapped back
    rect_origin = np.matmul(evecs, [np.min(rot_vec[0]), np.min(rot_vec[1])])
    rect_width = np.max(rot_vec[0]) - np.min(rot_vec[0])
    rect_height = np.max(rot_vec[1]) - np.min(rot_vec[1])
    obj_rect = Rectangle(rect_origin, rect_width, rect_height, angle=angle)
    pc = PatchCollection([obj_rect], facecolor='none', edgecolor='r', linewidth=2)
    ax2.add_collection(pc)
    ax2.annotate(r'{0:2.0f}$^\circ$ Rotation'.format(angle), xy=rect_origin,
                 xytext=(0, 0), textcoords='offset points', bbox=dict(fc='white'))
ax[1].set_xlim(np.min(x), np.max(x))
ax[1].set_ylim(np.min(y), np.max(y))
print('total time: {0:2.1f}'.format(time.time()-t1))
fig2.savefig('rectangles_over_real_image.png', dpi=200, facecolor=[252/255, 252/255, 252/255])
plt.show()
```

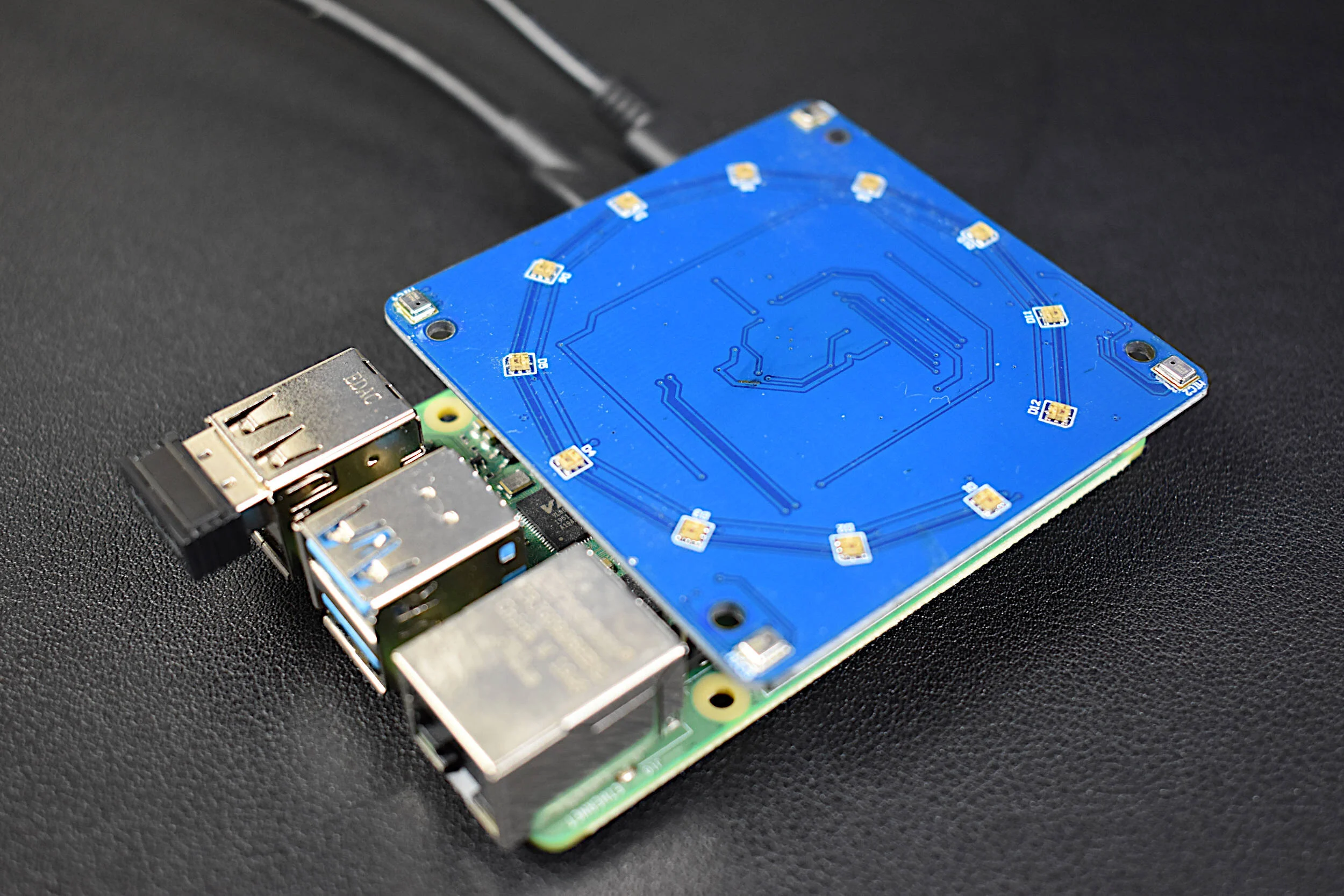

The routine can also be applied to images not taken by the Raspberry Pi picamera.
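To run the routine on an existing image file, the `cam.capture(data, 'rgb')` call can be replaced by a loader that returns the same kind of array: (H, W, 3) `uint8`. A minimal sketch, assuming an 8-bit image that `matplotlib.pyplot.imread` can read (PNGs come back as floats in [0, 1], other formats via Pillow as integers); the helper `load_rgb_uint8` and the demo file name are hypothetical. It also crops the frame to a multiple of 4 so that, with `scale_val = 0.25`, the zoomed image and the coordinate meshgrid keep matching shapes.

```python
import os
import tempfile
import numpy as np
import matplotlib.pyplot as plt

def load_rgb_uint8(path, multiple=4):
    """Read an image file into an (H, W, 3) uint8 array, cropped to a multiple of `multiple`."""
    img = plt.imread(path)
    if img.ndim == 2:                          # greyscale: replicate into three channels
        img = np.stack([img] * 3, axis=-1)
    img = img[:, :, :3]                        # drop an alpha channel if present
    if np.issubdtype(img.dtype, np.floating):  # PNGs load as floats in [0, 1]
        img = np.round(img * 255)
    h = img.shape[0] - img.shape[0] % multiple
    w = img.shape[1] - img.shape[1] % multiple
    return img[:h, :w].astype(np.uint8)

# usage with a throwaway test image (hypothetical file in a temp directory)
demo = np.zeros((50, 66, 3), dtype=np.uint8)
demo[10:20, 10:30] = (255, 0, 0)
path = os.path.join(tempfile.mkdtemp(), 'demo.png')
plt.imsave(path, demo)

data = load_rgb_uint8(path)  # use in place of cam.capture(data, 'rgb')
print(data.shape, data.dtype)
```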

In the third installment of the Python and Raspberry Pi image processing tutorial series, I explored image scaling, rotation, and establishing object boundaries. First, I introduced the zoom function for images in order to improve the processing speed required for object identification. Then, object orientation and the ideas of scatter and covariance were explored with the intention of quantifying the rotation of each object. Lastly, the object orientation was used to draw boxes around each object, making it possible to explore each individual object. There are many uses for this type of tool, specifically in image analysis, facial recognition, machine vision, modern applications in robotics, and much more. In the future, computer vision will be widespread, and many of the tools discussed in this series will be used to a higher and more efficient degree, enabling computers to interact with the world in ways closer to how humans experience it.

In the next and (likely) final entry, I will explore individual object recognition and real-time image analysis utilizing many of the tools introduced thus far.
