Image Processing Object Detection with Raspberry Pi and Python


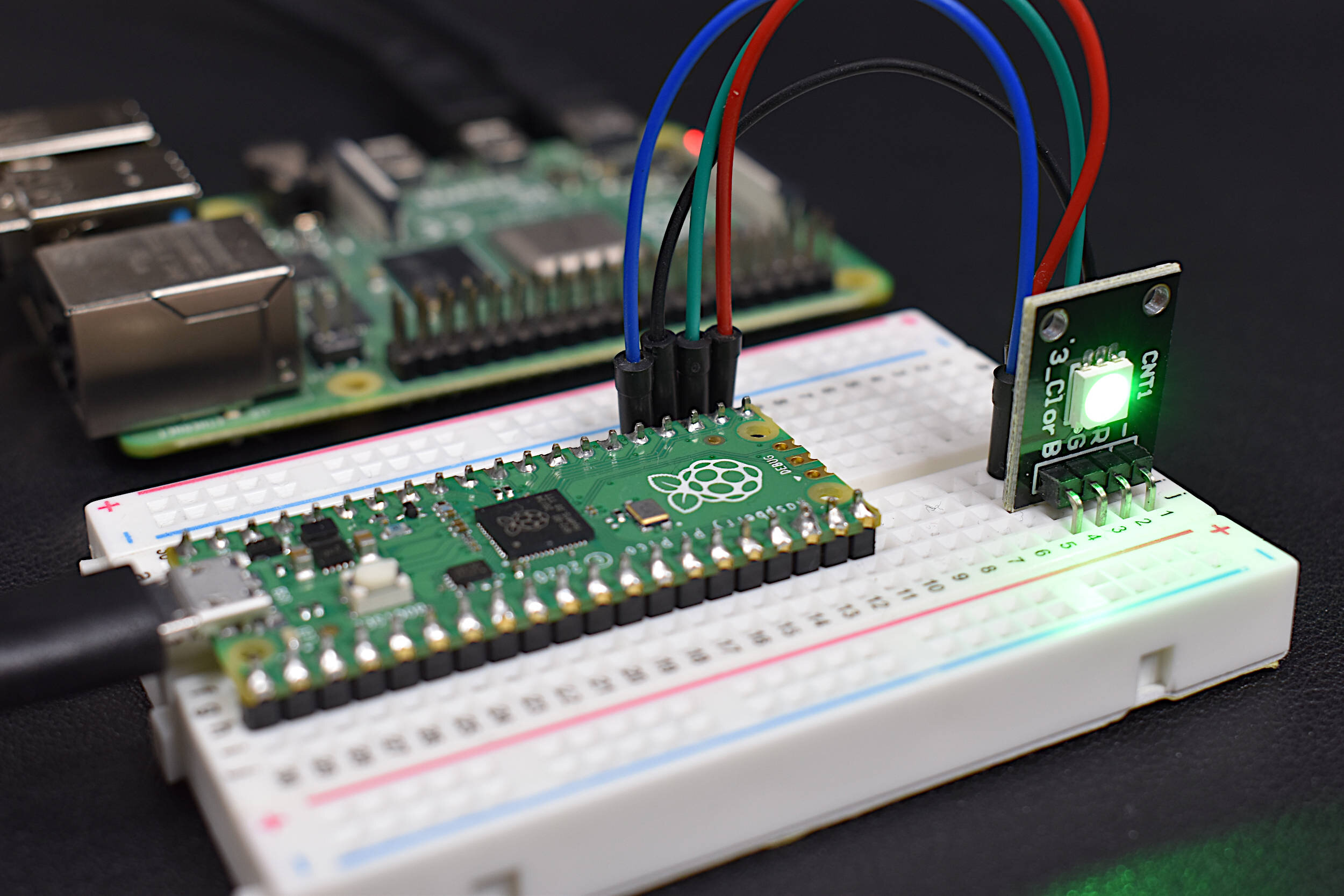

This is the second entry in the Raspberry Pi and Python image processing tutorial series. In Part I, the Raspberry Pi’s picamera was introduced along with its respective Python toolbox. Simple image manipulation and color recognition were explored using the picamera and Python’s numerical toolbox (NumPy). Click here to explore Part I. Note: in this tutorial, I migrated to the Raspberry Pi 3B+ for more processing power to decrease computation time. Originally, I started with the Raspberry Pi Zero W, but its computing power was slightly below what I needed.

In this entry, image processing-specific Python toolboxes are explored and applied to object detection to create algorithms that identify multiple objects and approximate their location in the frame using the picamera and Raspberry Pi. The methods used in this tutorial cover edge detection algorithms as well as some simple machine learning algorithms that allow us to identify individual objects in a frame.

Python’s ‘SciPy’ toolbox will be used for edge detection in images, which will help us determine the boundaries of multiple objects present in an image. In the Raspberry Pi terminal, SciPy can be installed using the following command:

pi@raspberrypi:~ $ sudo apt-get install python3-scipy

The multidimensional imaging toolbox, called ‘ndimage’, comes with SciPy and allows users to manipulate images using a wide array of algorithms. The full list of functions can be found in the ndimage reference guide here. The ndimage toolbox is powerful and efficient when dealing with image manipulation, specifically with regard to edge detection and spectral methods.
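To get a feel for ndimage before involving the camera, here is a minimal sketch that runs on a synthetic image, so it does not need a picamera. The test image, the `sigma` value, and the variable names are my own assumptions for illustration. One detail worth knowing: ndimage filters return the same dtype as their input by default, so a `uint8` image is cast to float first to keep negative derivative values from wrapping around.

```python
import numpy as np
import scipy.ndimage as scimg

# synthetic 64x64 grayscale image: a bright square on a dark background
img = np.zeros((64, 64), dtype=np.uint8)
img[16:48, 16:48] = 200

# cast to float first: ndimage filters keep the input dtype by default,
# so negative derivative values would wrap around in uint8
img_f = img.astype(float)

smooth = scimg.gaussian_filter(img_f, sigma=1.0)  # Gaussian smoothing
grad_rows = scimg.sobel(smooth, axis=0)           # derivative along rows
grad_cols = scimg.sobel(smooth, axis=1)           # derivative along columns
edges = np.hypot(grad_rows, grad_cols)            # gradient magnitude

# the response is strong on the square's border and flat in its interior
border_value = edges[32, 16]
interior_value = edges[32, 32]
```

The same cast could be applied to the camera frames used later in this tutorial, although I have not measured how much it changes the resulting edge plots.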

As an example of ndimage functionality, several of the most common methods for image manipulation can be tested to detect the edges that define objects. We can therefore use the ndimage toolbox to test these edge detection algorithms and see which best suits our needs. Below is the example code for four common edge detection algorithms, each of which uses a different derivative operator and a different form of convolution. The four methods are:

Gaussian Gradient

Laplacian of Gaussian

Canny Method

Sobel Method

We can see the weaknesses and strengths of each below in the four-panel plot.

    import time
    from picamera import PiCamera
    import scipy.ndimage as scimg
    import numpy as np
    import matplotlib.pyplot as plt

    # picamera setup
    h = 640 # largest resolution length
    cam_res = (int(h),int(0.75*h))
    # resizing to picamera's required ratios
    cam_res = (int(32*np.floor(cam_res[0]/32)),int(16*np.floor(cam_res[1]/16)))
    cam = PiCamera(resolution=cam_res)
    # preallocating image variables
    data = np.empty((cam_res[1],cam_res[0],3),dtype=np.uint8)
    x,y = np.meshgrid(np.arange(cam_res[0]),np.arange(cam_res[1]))

    # different edge detection methods
    cam.capture(data,'rgb') # capture image

    # Gaussian gradient magnitude
    t0 = time.time()
    grad_xy = scimg.gaussian_gradient_magnitude(data[:,:,0],sigma=1.5)
    ##grad_xy = np.mean(grad_xy,2)
    t_grad_xy = time.time()-t0

    # laplacian of gaussian
    t0 = time.time()
    lap = scimg.gaussian_laplace(data[:,:,0],sigma=0.7)
    t_lap = time.time()-t0

    # Canny method without angle
    t0 = time.time()
    gaus = scimg.fourier_gaussian(data[:,:,0],sigma=0.05)
    can_x = scimg.prewitt(gaus,axis=0)
    can_y = scimg.prewitt(gaus,axis=1)
    can = np.hypot(can_x,can_y)
    ##can = np.mean(can,2)
    t_can = time.time()-t0

    # Sobel method
    t0 = time.time()
    sob_x = scimg.sobel(data[:,:,0],axis=0)
    sob_y = scimg.sobel(data[:,:,0],axis=1)
    sob = np.hypot(sob_x,sob_y)
    ##sob = np.mean(sob,2)
    t_sob = time.time()-t0

    # plotting routines and labeling
    fig,ax = plt.subplots(2,2,figsize=(12,6))
    ax[0,0].pcolormesh(x,y,grad_xy,cmap='gray')
    ax[0,0].set_title(r'Gaussian Gradient [$\sigma = 1.5$] (Computation Time: {0:2.2f}s)'.format(t_grad_xy))
    ax[0,1].pcolormesh(x,y,lap,cmap='gray')
    ax[0,1].set_title(r'Laplacian of Gaussian [$\sigma = 0.7$] (Computation Time: {0:2.2f}s)'.format(t_lap))
    ax[1,0].pcolormesh(x,y,can,cmap='gray')
    ax[1,0].set_title(r'Canny [$\sigma = 0.05$] (Computation Time: {0:2.2f}s)'.format(t_can))
    ax[1,1].pcolormesh(x,y,sob,cmap='gray')
    ax[1,1].set_title('Sobel (Computation Time: {0:2.2f}s)'.format(t_sob))
    fig.tight_layout()
    fig.savefig('edge_plots.png',dpi=150,facecolor=[252/255,252/255,252/255])

    # analyzing histograms
    fig2,ax2 = plt.subplots(2,2,figsize=(12,6))
    ax2[0,0].hist(grad_xy.ravel(),bins=100)
    ax2[0,1].hist(lap.ravel(),bins=100)
    ax2[1,0].hist(can.ravel(),bins=100)
    ax2[1,1].hist(sob.ravel(),bins=100)

    # pulling out object edges
    fig3,ax3 = plt.subplots(3,1,figsize=(12,6))
    ax3[0].pcolormesh(x,y,can)
    bin_size = 100
    hist_vec = ax3[1].hist(can.ravel(),bins=bin_size)
    hist_x,hist_y = hist_vec[0],hist_vec[1] # bin counts, bin edges
    for ii in range(np.argmax(hist_x),bin_size):
        hist_max = hist_y[ii]
        if hist_x[ii]<0.01*np.max(hist_x):
            break
    ax3[2].plot(x[can>hist_max],y[can>hist_max],marker='.',linestyle='')
    plt.show()

The original image is also shown below, for reference.

Original Image Analyzed Above

We can see that each method does fairly well at pulling out the edges of each object, though some are more precise, some are noisier, and some miss edges. As is often the case, the most accurate of the four, the Canny method, also requires the most computation time. However, it is accurate enough that I will use it as the method for detecting objects (the listing uses a simplified version, labeled in the code as Canny ‘without angle’). The edge plots also use only one of the image’s color channels (red), so using all three (RGB) should help lower the error when detecting objects.
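As a rough illustration of the point about using all three color channels, the sketch below averages the Gaussian gradient magnitude over R, G, and B, much like the commented-out `np.mean(...,2)` lines in the listing hint at. The function name `edge_strength_rgb` and the synthetic frame are hypothetical, chosen only for this example, and averaging is just one of several reasonable ways to combine channels.

```python
import numpy as np
import scipy.ndimage as scimg

def edge_strength_rgb(rgb, sigma=1.5):
    """Average the Gaussian gradient magnitude over the color channels."""
    rgb = rgb.astype(float)  # avoid uint8 wrap-around in the filter output
    per_channel = [
        scimg.gaussian_gradient_magnitude(rgb[:, :, c], sigma=sigma)
        for c in range(rgb.shape[2])
    ]
    return np.mean(per_channel, axis=0)

# synthetic frame: a pure blue square, which the red channel cannot see
frame = np.zeros((48, 64, 3), dtype=np.uint8)
frame[12:36, 20:44, 2] = 255

red_only = scimg.gaussian_gradient_magnitude(
    frame[:, :, 0].astype(float), sigma=1.5)
all_channels = edge_strength_rgb(frame)
```

In this contrived case the red channel alone returns no edges at all, while the three-channel average still picks up the square. Real scenes are less extreme, and the extra filtering roughly triples the work per frame.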

The histogram is useful in image processing because there may be multiple artifacts that do not follow standard single-peak Gaussian distributions. We will see that the histogram distributions can be not only multi-peaked, but also asymmetric. The plot below shows the distribution of edge values for the image above, using 100 bins.

One method for approximating object edges is to find the point where the values rise above the noise while enough points remain to delineate shapes. Above, I have marked the 1% dropoff point, which is the first histogram bin past the main peak whose count falls below 1% of the peak count. At that point we are approximately above the noise floor but still include enough points to recreate the object edges. The scatter points above the 1% dropoff are shown below:

The code to approximate the scatter points above is also included below.

    import time
    from picamera import PiCamera
    import scipy.ndimage as scimg
    import numpy as np
    import matplotlib.pyplot as plt

    plt.style.use('ggplot')

    # picamera setup
    h = 640 #largest resolution length
    cam_res = (int(h),int(0.75*h))
    # resizing to picamera's required ratios
    cam_res = (int(32*np.floor(cam_res[0]/32)),int(16*np.floor(cam_res[1]/16)))
    cam = PiCamera(resolution=cam_res)
    # preallocating image variables
    data = np.empty((cam_res[1],cam_res[0],3),dtype=np.uint8)
    x,y = np.meshgrid(np.arange(cam_res[0]),np.arange(cam_res[1]))

    # different edge detection methods
    cam.capture(data,'rgb') # capture image

    # Canny method without angle
    t0 = time.time()
    gaus = scimg.fourier_gaussian(data[:,:,0],sigma=0.01)
    can_x = scimg.prewitt(gaus,axis=0)
    can_y = scimg.prewitt(gaus,axis=1)
    can = np.hypot(can_x,can_y)
    t_can = time.time()-t0

    # pulling out object edges
    fig3,ax3 = plt.subplots(2,1,figsize=(10,7))
    ax3[0].pcolormesh(x,y,can,cmap='gray')
    bin_size = 100 # total bins to show
    percent_cutoff = 0.01 # cutoff once main peak tapers to 1% of max
    hist_vec = np.histogram(can.ravel(),bins=bin_size)
    hist_x,hist_y = hist_vec[0],hist_vec[1]
    for ii in range(np.argmax(hist_x),bin_size):
        hist_max = hist_y[ii]
        if hist_x[ii]<percent_cutoff*np.max(hist_x):
            break

    # scatter points where objects exist
    ax3[1].plot(x[can>hist_max],y[can>hist_max],marker='.',linestyle='',
                label='Scatter Above 1% Dropoff')
    ax3[1].set_xlim(np.min(x),np.max(x))
    ax3[1].set_ylim(np.min(y),np.max(y))
    ax3[1].legend()
    plt.show()

The code above (assuming the user is using the picamera and Python) goes through the following object detection routine:

Take a snapshot using the picamera

Calculate edges using the Canny method

Establish the 1% dropoff region

Include only points above the 1% dropoff region to approximate object regions
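The dropoff step in the routine above can be isolated into a small function, which makes it easier to test without a camera. This is only a sketch: the name `dropoff_threshold` and the synthetic data are my own assumptions, while the scan itself follows the same logic as the loop in the listing (start at the histogram peak and move right until a bin count falls below the chosen fraction of the peak).

```python
import numpy as np

def dropoff_threshold(values, bins=100, fraction=0.01):
    """Return the left edge of the first bin, scanning right from the
    histogram peak, whose count is below `fraction` of the peak count."""
    counts, edges = np.histogram(np.ravel(values), bins=bins)
    peak = np.argmax(counts)
    for ii in range(peak, bins):
        if counts[ii] < fraction * counts[peak]:
            return edges[ii]
    # no bin dropped below the cutoff: fall back to the last bin's left edge
    return edges[bins - 1]

# synthetic edge map: many weak background responses, a few strong edges
rng = np.random.default_rng(0)
noise = np.abs(rng.normal(0.0, 1.0, 10000))
strong = rng.uniform(20.0, 30.0, 200)
thresh = dropoff_threshold(np.concatenate([noise, strong]))

kept_strong = np.sum(strong > thresh)     # strong responses that survive
kept_noise_frac = np.mean(noise > thresh) # fraction of noise that survives
```

With an edge map from the camera, the call would look like `dropoff_threshold(can)`, followed by the same `can > thresh` mask used for the scatter plot.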

In the next section, we can begin to draw boundaries around the objects using clustering methods and hopefully delineate multiple objects and approximate their color.

Now that we have identified the rough boundaries of the objects in our images, we can investigate methods for clustering the points and identifying each individual object.

First, the ‘scikit-learn’ module in Python needs to be installed. It contains the machine learning algorithms that will be essential for clustering data points and approximating each object’s bounding box. Scikit-learn can be installed using the standard Raspberry Pi Python install method:

pi@raspberrypi:~ $ sudo pip3 install -U scikit-learn

Once the Scikit-learn module is installed, we can import it and investigate the DBSCAN method (Scikit-learn DBSCAN webpage). DBSCAN will sift through the points and separate ‘objects’ based on the proximity between points. An example of DBSCAN is shown below, which will return the groups of points associated with each object.

    import time
    from picamera import PiCamera
    import scipy.ndimage as scimg
    import numpy as np
    import matplotlib.pyplot as plt
    from matplotlib.collections import PatchCollection
    from matplotlib.patches import Rectangle
    from sklearn.cluster import DBSCAN

    # picamera setup
    h = 640 #largest resolution length
    cam_res = (int(h),int(0.75*h))
    # resizing to picamera's required ratios
    cam_res = (int(32*np.floor(cam_res[0]/32)),int(16*np.floor(cam_res[1]/16)))
    cam = PiCamera(resolution=cam_res)
    # preallocating image variables
    data = np.empty((cam_res[1],cam_res[0],3),dtype=np.uint8)
    x,y = np.meshgrid(np.arange(cam_res[0]),np.arange(cam_res[1]))

    # different edge detection methods
    cam.capture(data,'rgb') # capture image

    # Canny method without angle
    t1 = time.time()
    gaus = scimg.fourier_gaussian(data[:,:,0],sigma=0.01)
    can_x = scimg.prewitt(gaus,axis=0)
    can_y = scimg.prewitt(gaus,axis=1)
    can = np.hypot(can_x,can_y)

    # pulling out object edges
    fig3,ax3 = plt.subplots(2,1,figsize=(10,7))
    ax3[0].pcolormesh(x,y,can,cmap='gray')
    bin_size = 100 # total bins to show
    percent_cutoff = 0.02 # cutoff once main peak tapers to 2% of max
    hist_vec = np.histogram(can.ravel(),bins=bin_size)
    hist_x,hist_y = hist_vec[0],hist_vec[1]
    for ii in range(np.argmax(hist_x),bin_size):
        hist_max = hist_y[ii]
        if hist_x[ii]<percent_cutoff*np.max(hist_x):
            break

    # sklearn section for clustering
    x_cluster = x[can>hist_max]
    y_cluster = y[can>hist_max]
    scat_pts = []
    for ii,jj in zip(x_cluster,y_cluster):
        scat_pts.append((ii,jj))

    min_samps = 15
    leaf_sz = 10
    max_dxdy = 25
    # clustering analysis for object detection
    clustering = DBSCAN(eps=max_dxdy,min_samples=min_samps,
                        algorithm='kd_tree',
                        leaf_size=leaf_sz).fit(scat_pts)

    color_txt = ['Red','Green','Blue']

    fig4,ax4 = plt.subplots(1)
    fig4.set_size_inches(9,7)
    im_show = ax4.imshow(data,origin='lower')

    # plotting the points of each cluster (label -1 marks noise)
    for ii in np.unique(clustering.labels_):
        if ii==-1:
            continue
        clus_dat = np.where(clustering.labels_==ii)
        x_pts = x_cluster[clus_dat]
        y_pts = y_cluster[clus_dat]
        ax3[1].plot(x_pts,y_pts,marker='.',linestyle='',label='Object {0:2.0f}'.format(ii))

    ax3[1].legend()
    fig3.savefig('dbscan_demo.png',dpi=150,facecolor=[252/255,252/255,252/255])
    plt.show()
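The picamera listing plots the clustered points but stops short of computing a bounding box for each object. As a minimal sketch of that last step, the example below clusters synthetic points (two blobs and one stray point, all invented for illustration) with the same `eps` and `min_samples` values and takes the minimum and maximum of each cluster as its box. The `boxes` dictionary and its (x, y, width, height) layout are my own choice.

```python
import numpy as np
from sklearn.cluster import DBSCAN

# two synthetic blobs of "edge points" plus one isolated outlier
rng = np.random.default_rng(1)
blob_a = rng.uniform([100, 100], [140, 160], size=(200, 2))
blob_b = rng.uniform([400, 300], [460, 340], size=(200, 2))
outlier = np.array([[600.0, 20.0]])
pts = np.vstack([blob_a, blob_b, outlier])

labels = DBSCAN(eps=25, min_samples=15).fit(pts).labels_

boxes = {}
for lab in np.unique(labels):
    if lab == -1:  # -1 marks noise points that belong to no cluster
        continue
    member = pts[labels == lab]
    x_min, y_min = member.min(axis=0)
    x_max, y_max = member.max(axis=0)
    # store as lower-left corner plus width and height
    boxes[int(lab)] = (x_min, y_min, x_max - x_min, y_max - y_min)
```

Each tuple has the layout that matplotlib's `Rectangle((x, y), width, height)` expects, so the boxes could be drawn over the image with the `Rectangle` import already present in the listing.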

The result of the DBSCAN listing is shown in the scatter plot below, with each object drawn in a different color:

The Scikit-learn DBSCAN method outputs the four objects as we expect. As one might imagine, this is an incredibly useful tool for machine learning and computer vision applications. Unfortunately, in the case above, the total computation time is quite high, about 4-5 s on the Raspberry Pi. We should be able to reduce this substantially by lowering the resolution of the image before analysis and scaling the results back up. This will be a task for the next entry, along with rotation algorithms and color identification methods.
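As a preview of the resolution idea, here is one possible sketch: keep only every `step`-th pixel before computing the edges, then multiply the resulting pixel indices by `step` to return to full-frame coordinates. The function name `edges_downsampled`, the synthetic channel, and the choice of plain slicing (rather than averaging or interpolation) are assumptions for illustration, and I have not timed this on the Pi.

```python
import numpy as np
import scipy.ndimage as scimg

def edges_downsampled(channel, step=2):
    """Prewitt gradient magnitude on every `step`-th pixel of one channel."""
    small = channel[::step, ::step].astype(float)
    return np.hypot(scimg.prewitt(small, axis=0),
                    scimg.prewitt(small, axis=1))

# synthetic 480x640 channel containing one bright rectangle
channel = np.zeros((480, 640), dtype=np.uint8)
channel[100:300, 200:500] = 255

step = 4
edges_small = edges_downsampled(channel, step=step)

# indices of edge pixels on the coarse grid, mapped back to full resolution
rows, cols = np.nonzero(edges_small > 0)
y_full, x_full = rows * step, cols * step
```

With `step = 4` the edge filter and the clustering both see 16 times fewer pixels, at the cost of locating edges only to within a few pixels.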

In this entry in the image processing series, I introduced edge detection techniques and ultimately implemented the Canny algorithm to detect multiple objects. A histogram of the edge detection output was also introduced, which allowed us to approximate the region where object edges emerge and background noise can be ignored. These methods extracted the shapes of multiple objects, which we were then able to separate using the machine learning tool DBSCAN. DBSCAN took the general scatter of points and identified each individual object. This series of steps allows machines to pull out multiple objects from an image and attach identifiers to each. In the next entry, I will explore the individual objects and apply transformations and approximations, including re-orienting the objects, approximating each object’s color, and decreasing the computation time by compressing or rescaling the image so that the Raspberry Pi can run the analysis closer to real time.
